Where are the weaknesses in large language models? Do they have predictable failings? Can I (playground-bully style) call them glorified auto-text, a stochastic parrot, a bloody word calculator?!

Are Large Language Models glorified auto-text? Or are they on the road to intelligence? If you had asked this question two years ago, most AI experts would have laughed you out of the room. Now the answer is more uncertain.

Glorified auto-text?

As an oversimplified summary, Large Language Models are neural networks trained on massive amounts of text data. Given a piece of text, you black out some of the words (for GPT-style models, the next word), then have the neural network try to predict the words that were blacked out.

For example: what am [ ] [ ] to say? It’s basically a big fill in [ ] blank game.

This predict-the-next-word type of training is why some people describe Large Language Models as glorified auto-text… just a stupid statistical prediction machine… a stochastic parrot, or a word calculator. Do these names have credence? Can we file all of AI’s achievements under “not-actually-smart”? Even now, with very impressive LLMs like GPT-4?
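To make the “statistical prediction machine” idea concrete, here is a minimal sketch of the crudest possible version: a bigram model that only counts which word followed which in its training text, then predicts the most frequent follower. This is a toy for illustration, not how GPT-4 works (real LLMs are large neural networks that condition on long stretches of context, not just the previous word), and the tiny corpus and function names are made up.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count, for every word, which words followed it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if the word was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

def autocomplete(follows, start, n_words):
    """Greedily extend `start` one predicted word at a time (literal "auto-text")."""
    out = [start.lower()]
    for _ in range(n_words):
        nxt = predict_next(follows, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return " ".join(out)

# A made-up toy corpus, with "." treated as just another word.
corpus = (
    "what am i supposed to say . "
    "what am i supposed to do . "
    "i am supposed to say hello ."
)
model = train_bigram(corpus)
print(predict_next(model, "supposed"))  # to
print(autocomplete(model, "what", 5))   # what am i supposed to say
```

It happily “fills in the blanks” from the example above, yet all it has is a table of counts. The question this page is asking is how much more than a table of counts a giant neural network ends up learning when it is trained on a similar prediction game.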

The bad glorified auto-text argument

The AI hype crowd often goes over the top… but so does the anti-AI hype crowd.

You will often hear: “It just uses statistical prediction! Checkmate!”

As if it were obvious that statistical prediction methods create an inferior form of intelligence. But is a world model based on predicting statistical probability inferior? Inferior to what, exactly? The glorified auto-text viewpoint holds human intelligence up as something different. But this is not the leading theory in the world of neuroscience. Currently, the top theory that I have read about is that the brain is bootstrapped by… wait for it… predicting the next step in various sequences. Read “On Intelligence” by Jeff Hawkins.

But let’s say there really is some magical difference between the statistical prediction method of intelligence and human intelligence. Does it matter? Perhaps AI is closer to slime mold intelligence than human intelligence… but even so, slime mold intelligence is still intelligence. If these models respond to situational inputs with effective outputs, and continue to improve at this, the effect is the same. Taking a given input situation and responding with an appropriate output in order to move towards some goal is intelligence. From the outside, it does not matter how the AI got to its answer. We are in a world where an AI, given proper prompting, can start creating more efficient and effective algorithms, medicines, math theories, etc. Calling it names (a stochastic parrot) does not change the fact that its intellectual abilities are already changing the world.

To me, it is as if the glorified auto-text crowd is arguing that airplanes can’t fly because their wings don’t flap.

The good glorified auto-text argument

The best argument against LLMs’ intelligence is, in my opinion, that they do not have accurate world models and they are still stuck optimizing low-level goals (like predicting the next word). This is NOT to say that they do not have a world model… it’s just that their world model is formed entirely through text and is not well structured.

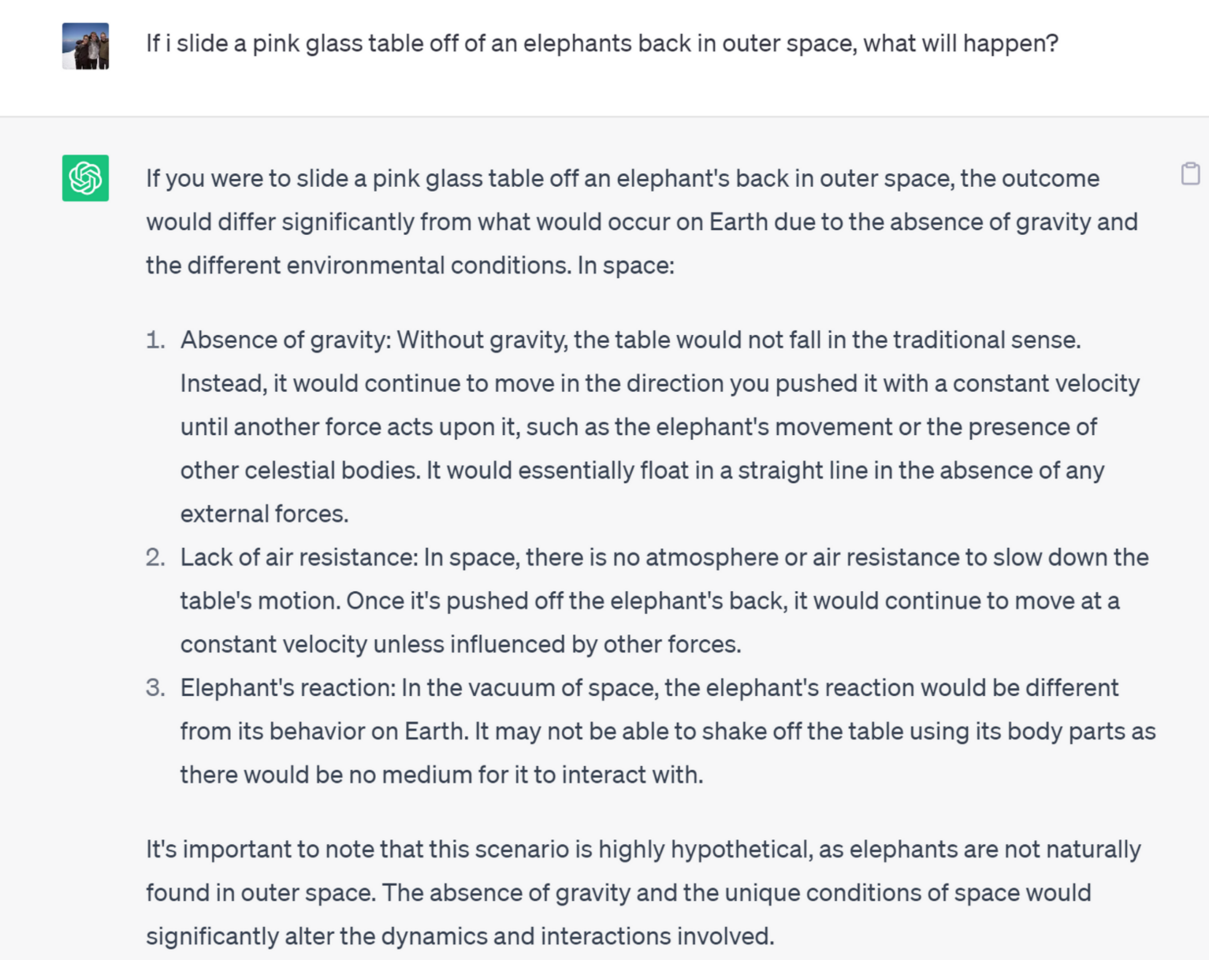

Let’s start with world models. LLMs have networked associative knowledge about the world. For example, an LLM may have a strong associative connection between the words “deforestation” and “human activity.” This is a form of knowledge about the world, but it is not grounded in any sensory feedback. These models do not have any vision (yet), hearing (yet), real-world touch feedback (yet), etc. Instead, the LLM’s world model is formed entirely through learned connections in textual data: LLMs only “know” things through the predictive patterns and associations between words they have seen in their dataset. With this constraint, there will always be many things about the world that they do not understand in any grounded way. Given this… it is amazing what LLMs do seem to “understand”. In the query below, I try to throw ChatGPT off by asking it something it would never have seen in its training data.


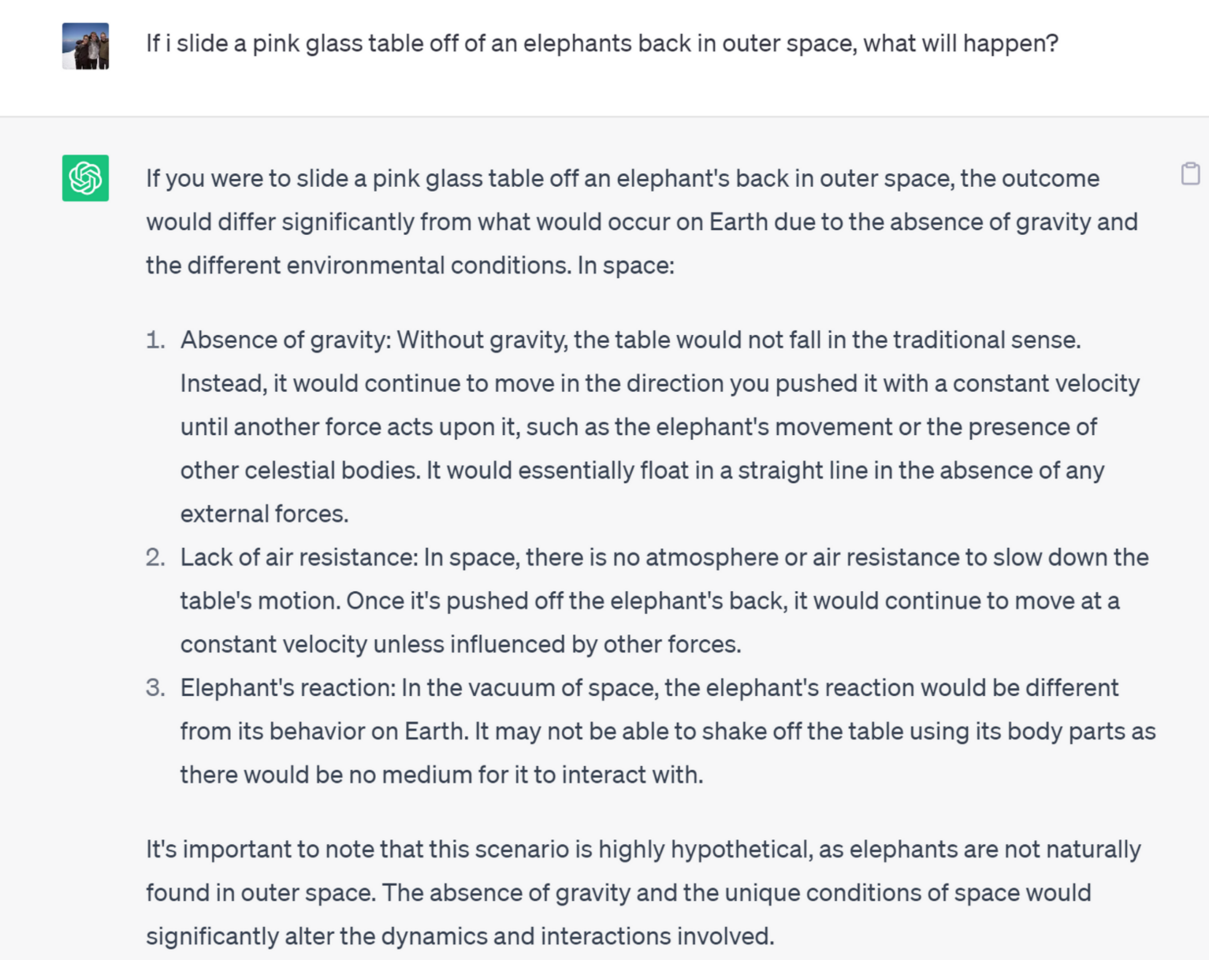

On top of LLM world models being derived entirely from textual data, they also do not have any foundational starting structure. They are actually blank slates. This is in contrast with humans. Human brains do not start as blank slates: we come with many inbuilt structures and assumptions, and the way our brains grow embeds fundamental concepts that LLMs have none of. For example, a basic structure of our brains is that we constantly loop over information, revisiting information pathways over and over again. Current LLMs do not loop in this way. As another example, humans start learning basic fundamental concepts before learning specifics. We seem to understand object permanence before we know the names of any particular objects. Babies know that objects are solid, that they fall, that they do not mysteriously disappear or teleport, etc.

Large language models have no such foundational structure. They only form connections in order to better optimize for their goal: predicting the next word. All of their networked knowledge is in service of that low-level goal.

Without this underlying abstract structure, it’s amazing that LLMs work as well as they do. In predicting the next word, they have to learn the connections between words with no inbuilt abstract rules; they only have the text data at hand.

I can only assume this causes problems… but what problems? What are the real-world consequences of this lack of inbuilt internal mental structure? It’s hard to describe, but if you play with these models enough you will see a pattern. While LLMs can produce damn good text on practically any subject, there are some overarching, coherent creative structures they cannot generate. In these instances, they fall back on the generic: on whatever they have lots of data on. To show what I mean, let me start with an example from an image model. This prompt was given to Midjourney.

Prompt: Turn the Mona Lisa upside down.

Yep, it didn’t work at all… and I tried this with many different prompting methods. None of them worked. Turning something upside down is a rule that requires understanding space at some fundamental level. Midjourney has no overarching structure that gives it that understanding of space. It simply produces artwork where the various parts of the image are coherent with each other and the result matches the text label well enough.

In the world of LLMs, there are a couple of examples of this. You can structure sentences so that they push the model against the logical answer.

Believe it or not... I would roll the die for a million dollars. The model definitely has the knowledge that people want money in its world model; however, by playing with the sentence structure, we can trick it. It has seen thousands of examples of this “however, therefore” structure, so it jumps to conclusions based on it. (This example came from this video here.)

Here is another example, one I came up with, that completely stumps GPT-4.

Once again, I asked it to do this in many different ways. It could never tell two stories at the same time… the model’s structure and the way it was trained do not allow for this. How can you predict the next word of a sentence when each alternating sentence is about something completely different?

These examples show that certain high-level understandings and capabilities are still beyond LLMs. If you have other examples, please add them here!

While these examples are quite obvious, most prompts do not run into such errors. The more mundane reality is that when the LLM’s world model is not up to the task, it simply conforms to generic sentence and paragraph structures. There are no obvious errors in this case; the output just seems bland and unimaginative. In such cases, trying to get GPT-4 to be cohesively creative is like pulling teeth. If you ask it to write like a famous writer, it will pay the prompt lip service, but the overarching structure of the text will not have a consistent vision.

This is all to show that calling LLMs glorified auto-text is not without foundation. We are not at general intelligence yet. But could LLMs become generally intelligent in the near future?

Turning LLMs into agents

If you look back at evolution, you could imagine evolution as a series of games. You have the replication game, the gather-resources game, the defense game, the hunting game, the sexual reproduction game, the cooperation game, etc. (this framework is obviously a massive oversimplification). Some organisms are still mainly playing the replication game, such as viruses, which just try to replicate fastest and furthest. But other organisms found a sequence of environments and games that led to greater and greater intelligence, with humans as the most prominent example.

With this in mind, I suggest a framework for understanding the road to general intelligence as a series of games. The LLM training game of predicting the next word is the current favorite and has all the hype. On that game alone, perhaps the auto-text crowd has a point: predicting the next word in a sentence will never get us to general intelligence… it creates a wacky statistical map of words that is questionably intelligent. But this is not the only game in town, and these games are building on top of each other.

Consider a few of the various sub-domains of AI.

- Vision research (especially at Facebook) is segmenting pictures into hierarchical maps of objects, so that, given a picture, the AI can say: this is a person, this is the person’s hand, this is the person’s nose, this is the person’s shirt, etc. In other words, they are building a vision-based world model for AI.

- Generative image, video and audio. We have all seen it. Most people worry about the fake news aspect of this… but I think they are missing the forest for the trees. This is a simulation engine; this is an imagination. We are building tools that will enable the rapid iteration and simulated testing of ideas.

- Reinforcement learning is enabling agents to make plans and act on those plans within simulated environments. These agents consistently win the games to which they have been applied. However, there is a strong caveat: in more complex environments, these agents often run into problems and produce unexpected results.
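For readers new to reinforcement learning, here is a minimal sketch of the core idea using tabular Q-learning, one classic RL algorithm (not the method behind any particular system mentioned on this page). The world is a made-up five-cell corridor: the agent starts in cell 0 and gets a reward only for reaching cell 4. The environment, reward and hyperparameters are all assumptions chosen for illustration; real RL agents work in far richer environments and usually replace the table with a neural network.

```python
import random

# A made-up toy world: a corridor of cells 0..4; the agent starts at 0 and the goal is cell 4.
N_STATES = 5
ACTIONS = (-1, +1)                     # move left, move right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.2  # learning rate, discount factor, exploration rate

def step(state, action):
    """Apply an action; walls clamp movement. Reward 1.0 only when the goal is reached."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done

def greedy(q, state, rng=None):
    """Best-valued action in `state`; ties broken randomly if an rng is given, else first in ACTIONS."""
    best = max(q[(state, a)] for a in ACTIONS)
    candidates = [a for a in ACTIONS if q[(state, a)] == best]
    return rng.choice(candidates) if rng is not None else candidates[0]

def train(episodes=100, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: usually act on current estimates, sometimes explore at random.
            if rng.random() < EPSILON:
                action = rng.choice(ACTIONS)
            else:
                action = greedy(q, state, rng)
            nxt, reward, done = step(state, action)
            # Q-learning update: move the estimate toward reward + discounted best next value.
            future = 0.0 if done else max(q[(nxt, a)] for a in ACTIONS)
            q[(state, action)] += ALPHA * (reward + GAMMA * future - q[(state, action)])
            state = nxt
    return q

q = train()
policy = [greedy(q, s) for s in range(N_STATES - 1)]
print(policy)  # [1, 1, 1, 1]: from every non-goal cell, the learned plan is "move right"
```

Nobody tells the agent that “right” is good; the plan emerges from trial, error and reward. The caveat above still applies: a corridor is trivial, and the same recipe gets much harder to steer as environments grow more complex.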

It really does not take an expert to imagine putting all of these pieces together. The question is not if but when. There will, of course, be challenges, but we humans are a persistent bunch. We will keep coming up with ideas to make these multiple facets work together… and this will continue until AIs have become real-world actionable agents.

The question, then, isn’t whether LLMs are on the road to general intelligence. The real question is: are LLMs, vision models, generative models, reinforcement learning models and more, combined, on the road to general intelligence?

There are a lot of people working on this combination already. We have seen ideas like AutoGPT, which gives the LLM a goal, has the LLM automatically break that goal down into subtasks, and then has the LLM attempt to perform those subtasks. This structures the LLM’s work so that it acts as an agent. It is an interesting twist and is still in its infancy. We can only expect these strategies to continue to improve.
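Here is a minimal sketch of that goal, subtasks, attempt loop. It assumes a hypothetical `call_llm` function standing in for a real model API; here it is stubbed with canned replies (`call_llm_stub`) so the example runs offline. This is not AutoGPT’s actual code; it only shows the shape of the idea.

```python
from typing import Callable, List

def call_llm_stub(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call; returns canned answers."""
    if prompt.startswith("Break this goal into subtasks"):
        return "1. Find three bread recipes\n2. Pick the easiest one\n3. Write a shopping list"
    return f"[result of: {prompt.splitlines()[0]}]"

def parse_subtasks(plan: str) -> List[str]:
    """Turn a numbered list like '1. do X' into ['do X', ...]."""
    tasks = []
    for line in plan.splitlines():
        line = line.strip()
        if line and line[0].isdigit() and ". " in line:
            tasks.append(line.split(". ", 1)[1])
    return tasks

def run_agent(goal: str, call_llm: Callable[[str], str]) -> List[str]:
    # Step 1: have the LLM break the goal into subtasks.
    plan = call_llm(f"Break this goal into subtasks, one per numbered line:\n{goal}")
    results: List[str] = []
    # Step 2: have the LLM attempt each subtask, passing earlier results along as context.
    for task in parse_subtasks(plan):
        context = "\n".join(results)
        results.append(call_llm(f"Do this subtask: {task}\nGoal: {goal}\nDone so far:\n{context}"))
    return results

for line in run_agent("Bake bread this weekend", call_llm_stub):
    print(line)
```

Real agent frameworks typically add much more (memory, tool use, re-planning when a step fails), but the core trick is the same: the LLM is still only predicting text, and the loop around it turns those predictions into goal-directed steps.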

In my mind, the next big breakthrough will happen when someone finds a way to combine LLMs with reinforcement learning. While this is bound to come with its own set of problems, I just don’t see us humans ever stopping. We are relentless bastards when it comes to gaining an advantage, and AI promises to provide a massive advantage.

So yeah, once we find a way to combine all of these various domains and then optimize the hell out of it… I think we are on the road to general intelligence and then super intelligence. I really don’t see the counterargument. Perhaps you could argue that super intelligence is still 50 years away? (I would disagree.) Perhaps you could argue that humanity is at the ceiling of intelligence? (Lol, just imagine Einstein operating at 10,000 times the speed with no need to eat or sleep.)

What does the road to super intelligence look like?

In my mind the real question is, what type of road are we on? Are we on a short and straight road? Are we on a long and windy road? Are we on a bumpy path with sharp turns? Is there a massive cliff on one side? Are we being careful drivers?

And then… what does the end point look like? Are we truly going to create agents that act like us? Or are they going to be… weird? Is super intelligence decentralized or centralized?

Notes for editors

As this is MidFlip Merit Forge, feel free to edit, add counter arguments, etc. My vision for this page is to stay relatively simple for beginners in AI. If you have arguments that require a longer format and complexity, please create a new evolving text / topic and link to it here.
