Abstract

Mathematical models can describe neural network architectures and training environments; however, the learned representations that emerge have remained difficult to model. Here we build a new theoretical model of internal representations using an economic and information-theoretic framing. We distinguish niches of value that representations can fit within.

We utilize a pincer movement of theoretical deductions. First, we build a set of deductions from a top-down model of General Learning Networks. Second, we build a set of deductions from a model based on units of representation. In combination the deductions allow us to infer a “map” of valuable neural network niches.

Our theoretical model is based on measurable dimensions, although we simplify these dimensions greatly. It offers a prediction of the general role of a neuron based on its contextual placement and its internal structure. Making these predictions practical and measurable requires software that has not yet been developed. Validating our predictions is beyond the scope of this paper.

Top-down direction:

We build the General Learning Network Model based on simple general principles.

We deduce a definition of internal value inherent to all learning networks.

We frame internal representations in information theory terms, which when combined with our general model, results in multiple abstraction metrics depending on the representation of interest.

This allows us to define the “signifier divide”, the boundary that separates different representational domains.

These representational domains allow us to define “top-down” niches of value, where neural structures within the different representational domains are specialized towards different roles.

Bottom-up direction:

We build the General Representative Inout Model based on simple general principles.

We isolate measurable dimensions in which we can compare the input-to-output mapping of different representational units.

We take these measurable dimensions and consider the extreme poles of these dimensions.

By combining the extreme poles of these measurable dimensions, we define polar archetypal neural structures. These archetypes describe distinct types of neural input-to-output mappings. It is unlikely that an average neuron would display such extreme features, but together these archetypes help define the range of difference.

Based on these polar archetypes we hypothesize specialized functions that fit the form. This gives us “bottom-up” niches of value.

In combination:

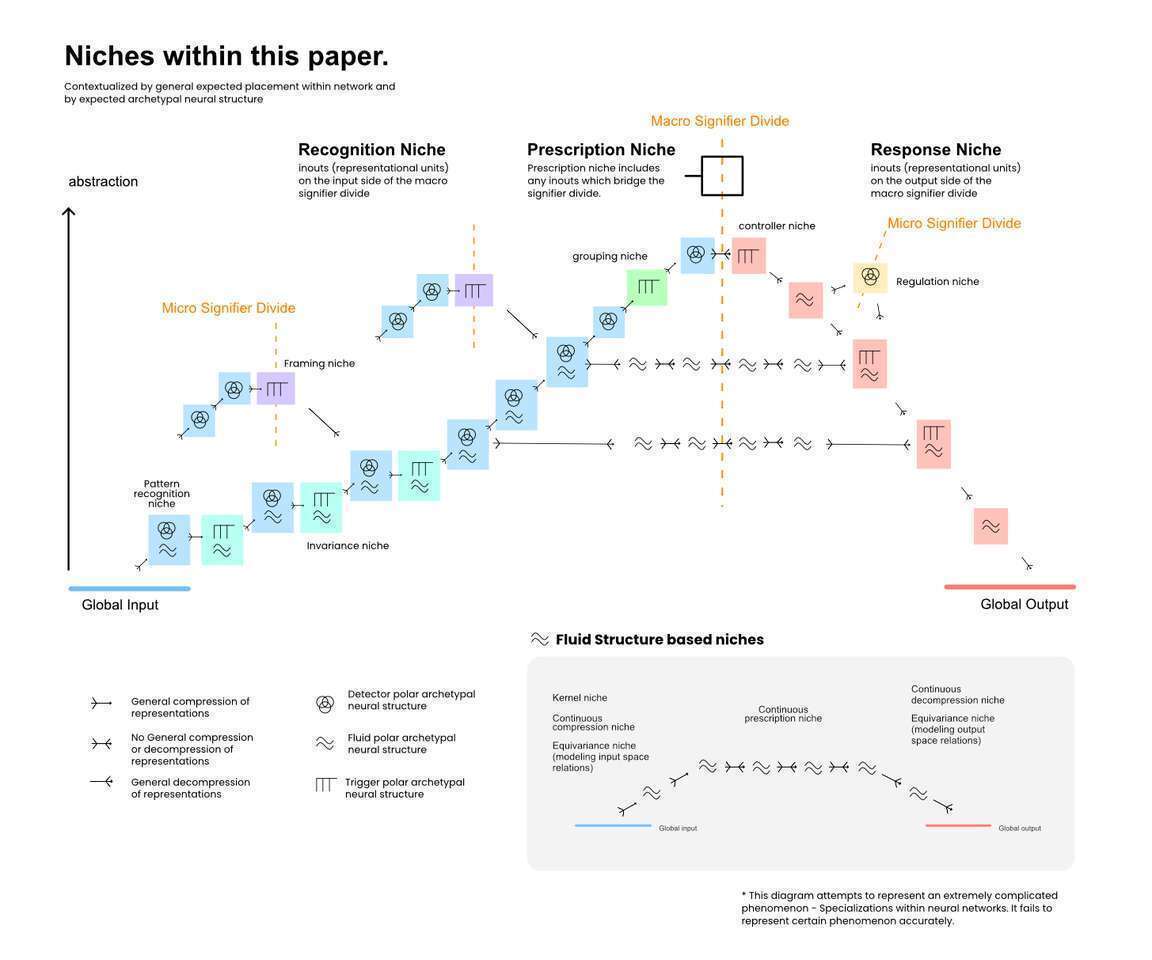

We contextualize the bottom-up niches of value within the top-down niches. This results in a series of diagrams that describe where we believe different niches emerge within neural networks.

We describe how our model affects our understanding of symbolic structures, abstraction, and the general learning process.

We highlight the measurable predictions based on the model we have laid out.

1. Introduction

Artificial Intelligence has become popular given the success of large language models such as GPT4. The capabilities of such models are impressive against any measurable test we devise. It is problematic then that our theoretical understanding of such models is questionable. While we understand much, we seem to be missing a key piece.

Mathematically, we can clearly define the network architecture. We can outline the layers, the activation functions, the normalization functions, etc.

Mathematically, we can clearly define the learning environment. We can outline the loss function, the backpropagation algorithm and cleverly mix and match all sorts of different labels.

However, defining the learned structure that emerges is a problem of a different sort. The learned weightings, their interrelations, and the representations they encode are hidden within a wall of complexity. Mathematical attempts to define this learned structure immediately run against this wall. There are simply too many variables and the human mind revolts. While some researchers may have a clever intuition, a clear language has not developed which describes these learned representations.

In this paper, we provide a different approach when addressing learned representations and their interrelations. In summary, we treat the learned structure as an economy of functional forms connecting input to output. We derive an inherent internal definition of value and make a series of deductions relating to the specialization of representations. The result is a set of niches that neural structures fall into. The final model allows us to make predictions about how neurons form, what value they are deriving, and where in the network different representations lie.

Our series of deductions comes from two directions that pincer together to create our final model. The first set of deductions originates from a general model of learning networks. The second set derives from a general model of representational units we call inouts. You can think of an inout as a box that we can scale up and down and move all around a learning network. Wherever this box goes, its contents can be defined as an inout. An inout is an adjustable reference frame within learning networks. Any set of parameters that can produce a learned input-to-output mapping can be defined as an inout. Given this exceedingly general unit we can then describe which reference frames are useful. For example, there are representative units that can exist within neurons so that one neural output can mean different things in different situations [1, 2].

Our top-down deductions allow us to define value inherent to every learning network. In short, the value within a learning network is the efficacy of generating output states in response to input states, as quantitatively assessed by the network’s measurement system (the loss function in neural networks). Defining value is important, as the rest of the paper rests on the assumption that neural structures specialize in order to better map inputs to outputs.

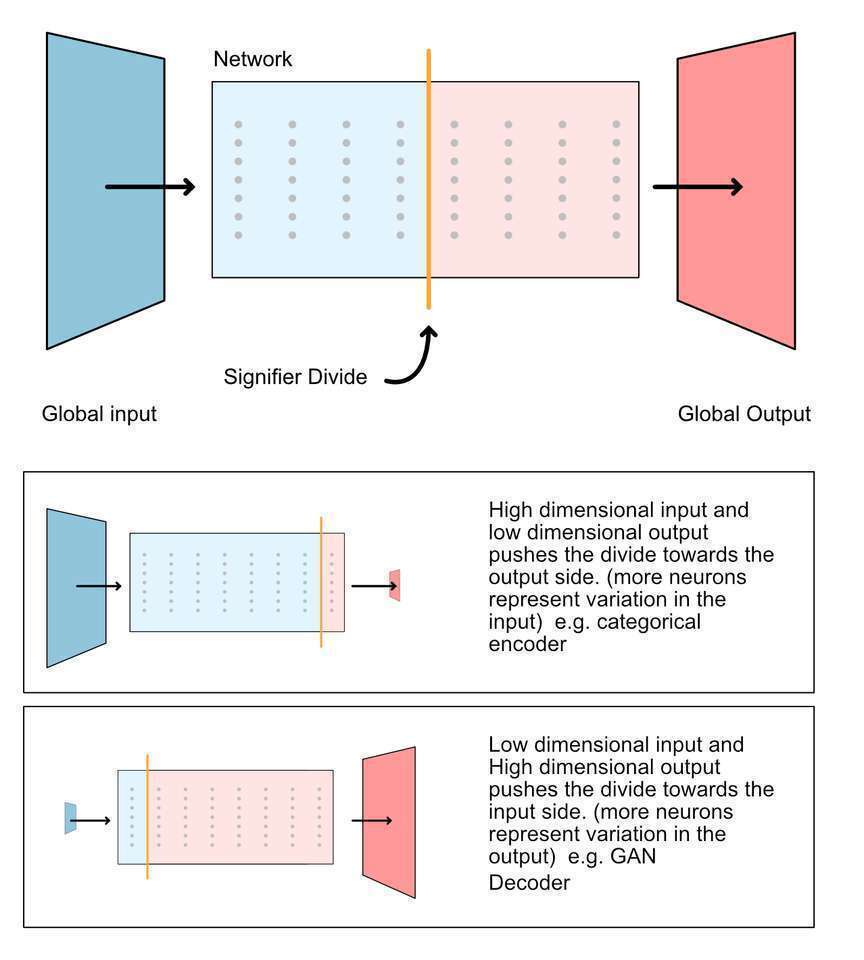

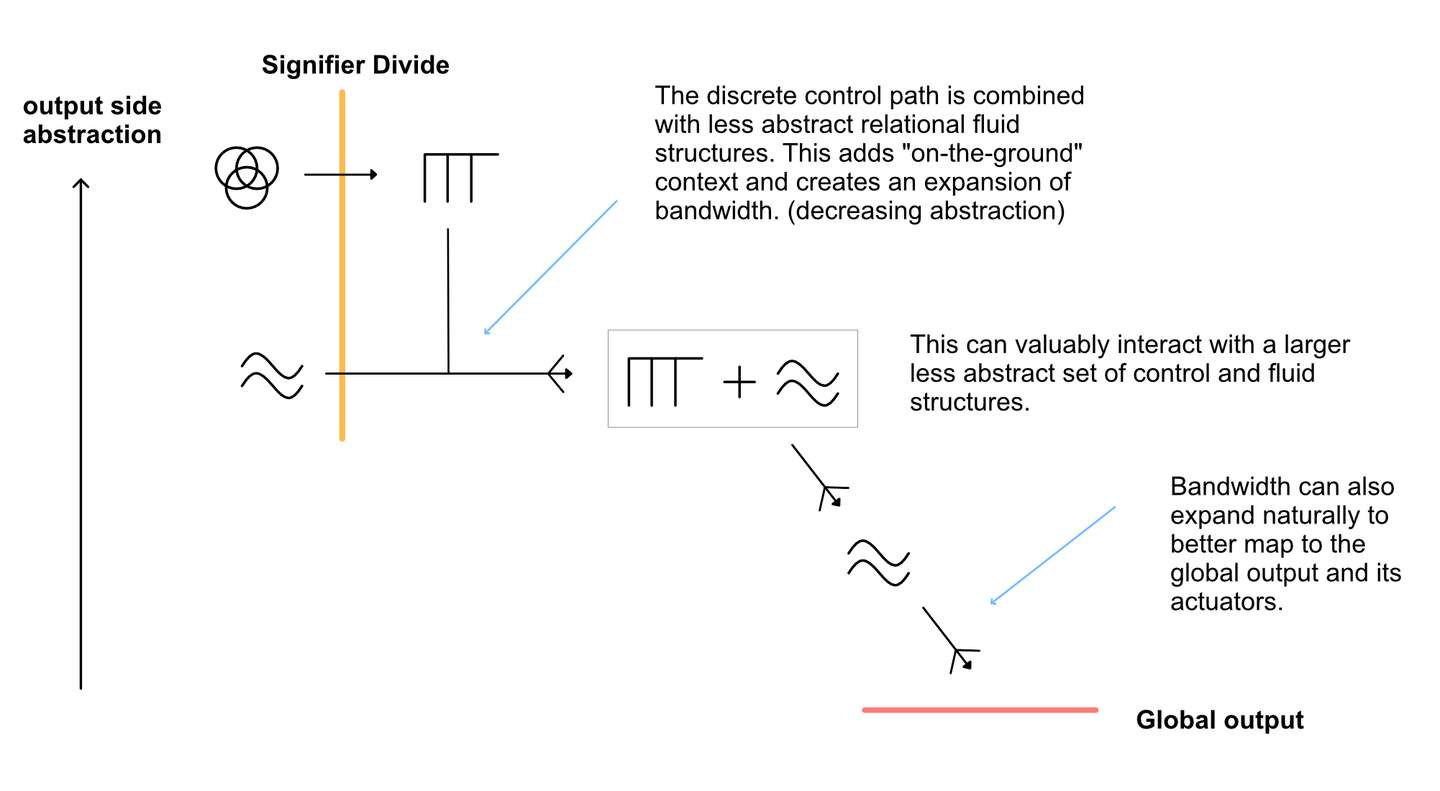

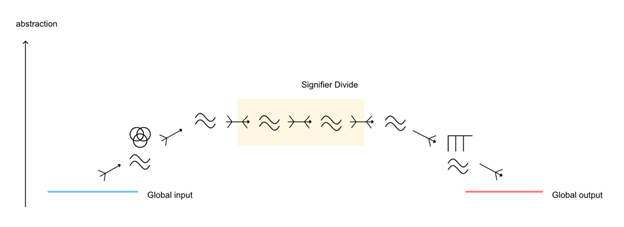

Given an absence of loops, we are then able to deduce the signifier divide, a division that we hypothesize to exist within many input-to-output mappings in learning networks. The signifier divide separates inouts that represent elements within the global input from inouts that represent some behavior within the global output. It divides representations of x from representations of y.

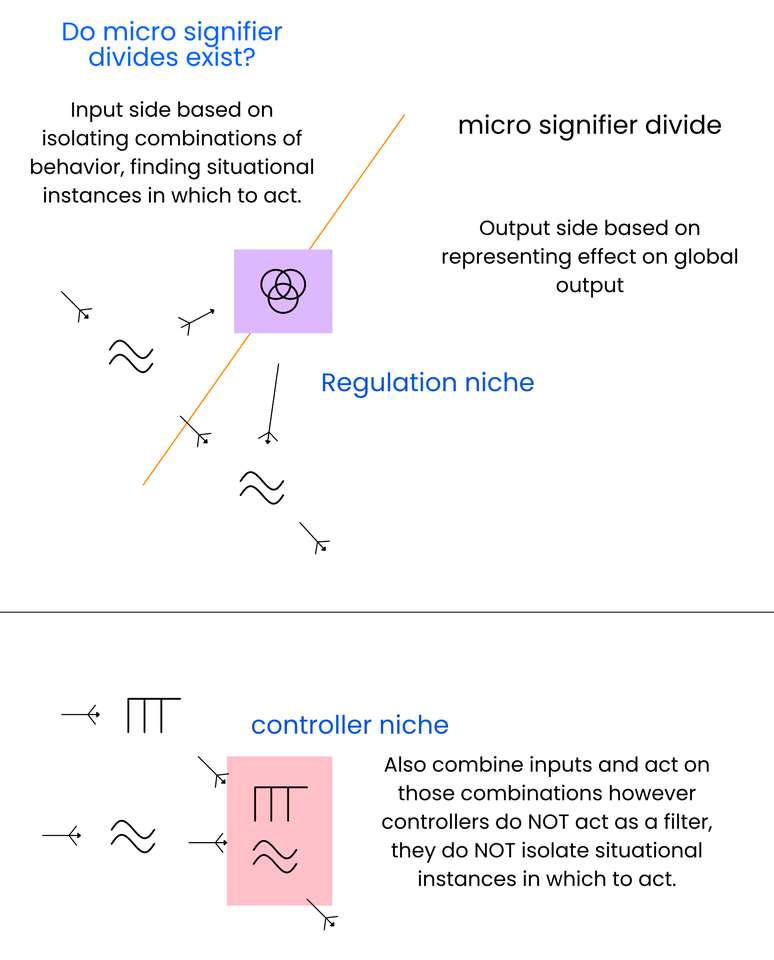

This divide is not exactly a divide. You can still consider neurons representing early input-based patterns (e.g. curve detectors) based on their effect on the network output. However, causation promotes a sometimes discrete, sometimes blurry divide in which representations at the beginning of the network best represent global input variation, and representations at the end of the network best represent global output variation. Given this divide we can describe some broad niches of value: the recognition niche, with inouts representing elements within the global input; the response niche, with inouts representing elements within the global output; and the prescription niche, with inouts that bridge the divide and connect situational inputs to behavioral responses.

Our bottom-up deductions start by describing sub-neural and neural level inouts. We define a set of hypothetically measurable dimensions which we believe best relate to an inout’s valuable input-to-output mapping. These dimensions are the following. First, the relational situational frequency of input channels (whether neural inputs fire together or fire separately in relation to the global input). Second, the relationships of the neural inputs with each other with respect to the neural output response (are the neuron’s inputs complementary, alternatives, inhibitory, stand-alones, etc.). And finally, the change in output frequency, defined by taking the average of the inputs’ situational frequencies and comparing that to the neural output’s situational frequency. Many of these measurements require a threshold of significance for both the inputs and outputs of the neuron in question.

You will notice that some of these hypothetically measurable dimensions are themselves multi-dimensional; after all, there are multiple inputs into a neuron. Relational situational frequency of inputs and neural input relationship dynamics are in themselves complex affairs. In order to deal with this complexity, we simplify these dimensions dramatically. Future, more complex models can be created simply by adjusting how we simplify these dimensions.

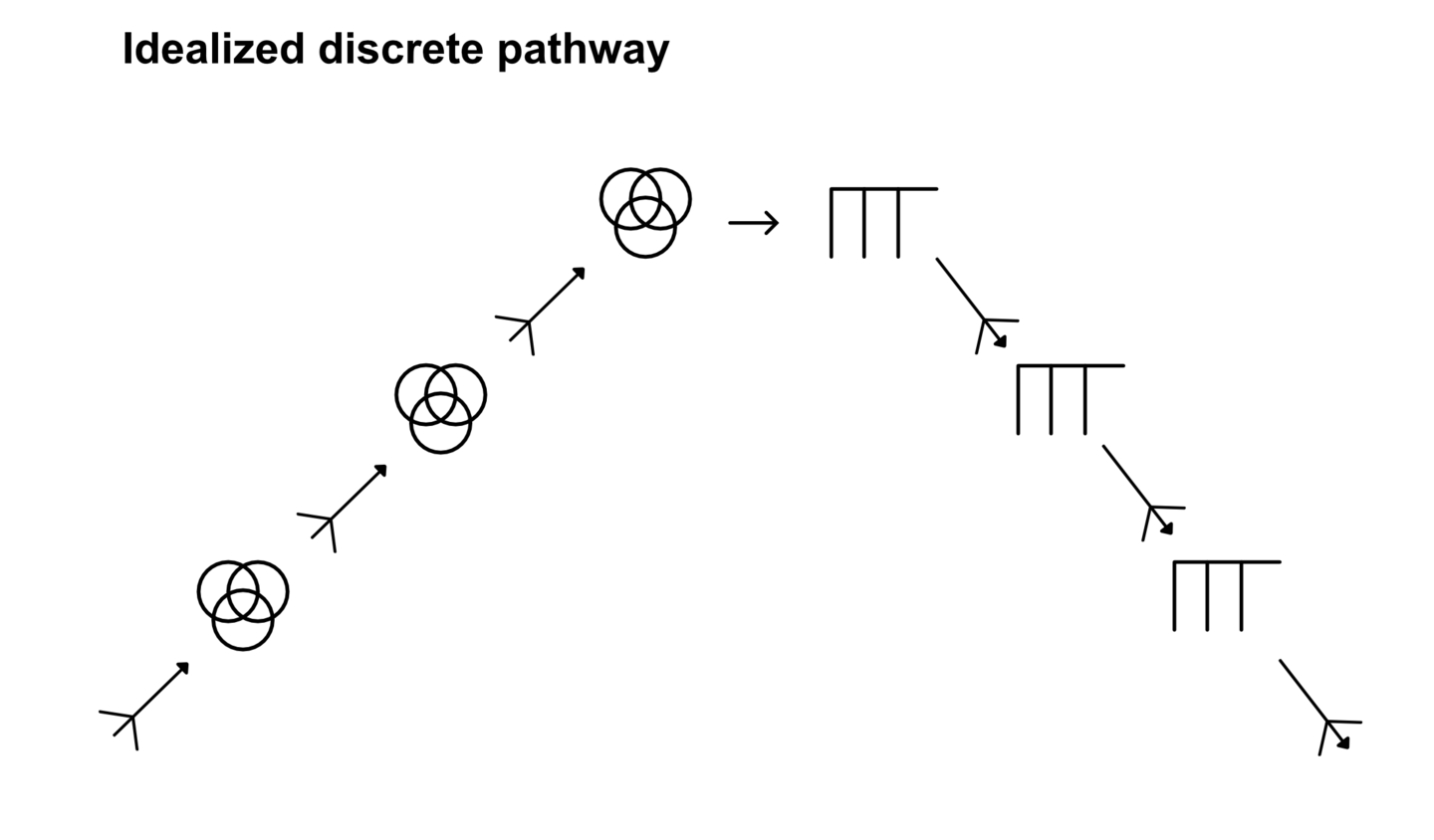

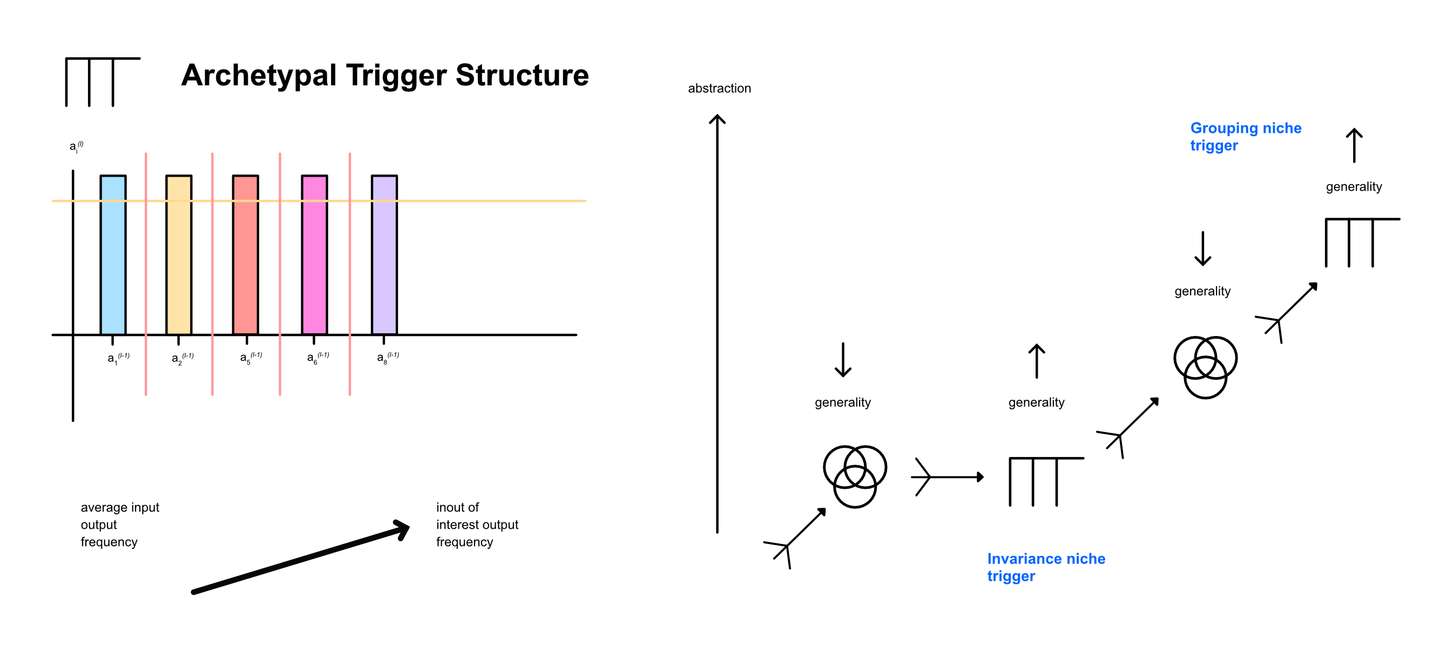

We then use an interesting theoretical trick. We take these simplified dimensions and take them to their extremes. We distinguish and define the far poles. We then mix and match these extreme poles to define distinct archetypal neural structures. For example, we can consider a neuron whose inputs all significantly influence the output but all “fire” separately during different input situations. This results in an increase in output frequency relative to the inputs (we call this particular type of neuron the archetypal trigger structure). Many of these mixed and matched structures are not valuable under any circumstance, but those that are valuable provide us with an interesting trichotomy of neural structures.

The strategy that we just described has many caveats. These archetypal structures are based on the extreme poles of simplified measurements. Let us be extremely clear: real neuronal and sub-neuronal inouts can find a range of in-between structures. Input relationships and situational input frequencies are dimensions of difference which can get far more complicated. The archetypal structures are not meant to convey the reality of what is happening; they instead convey simplified directions of structural variation. This is rather analogous to taking the periodic table and saying the following:

1. Some elements have lots of protons, some have few.

2. Some elements have free electrons allowing for connection points, some do not.

Then we combine these simplified variations to say there are four archetypal poles:

1. Elements with lots of protons and electron-based connection points.

2. Elements with few protons and no electron connection points.

3. Elements with lots of protons and no electron connection points.

4. Elements with few protons and electron-based connection points.

Such a simplification obviously loses a ton of important complexity, especially in the periodic table’s case. However, in the case of deep learning networks, such a simplification is very useful in order to have something to grab on to. In future work, we can slowly add in further complexity so that our understanding becomes more refined.

Given our archetypal structures we begin to hypothesize niches of value that such forms can fill. We then contextualize these niches of value within the top-down framework based around the signifier divide. This provides a general map of sorts, of what representational units are doing where.

Chapters 7, 8, and 9 elaborate on the consequences of our model. In chapter 7, we discuss multi-neural symbolic structures and how continuous and “semi” discrete neural outputs work together to represent elements within relational and contextual structures. In chapter 8, we discuss our new conceptualization of abstraction, in which we imagine multiple abstraction metrics depending on the representation we are considering. This chapter also introduces the concept of a micro-signifier divide which certain niches of representative units produce. In chapter 9, we discuss a symbolic growth hypothesis, where we contextualize the growth of different niches within the training process.

Finally, we sum up with chapter 10: measurable predictions. It summarizes our findings on mature input-to-output mappings and reiterates the different niches and how they are useful. It finishes by outlining the set of predictions that our model implies. These predictions are based on our polar archetypes defined by simplified dimensions and so are ‘approximate’ predictions with the caveat that future work can refine such predictions.

Regardless, the general methodology points to a set of measurable predictions. We do not believe this set of predictions is verifiable today; however, future work will likely (and quickly) be able to create and perform such tests.

We hope that this paper will be a useful building block towards creating interpretable neural network systems. We envision future software solutions which move back and forth finding clues in a sudoku-like manner. The combination of these clues, we hope, will provide decent certainty about which neurons represent what, the value they provide, and how they are interrelated.

As artificially intelligent systems improve in capabilities, certain undesirable future states present themselves. We hope future papers about artificial intelligence include the author’s consideration of externalities and so we present our own brief consideration here. Generally, we believe that there is a danger in a misalignment between what we want and what we measurably optimize towards. This paper is not about optimization techniques; however, there is a possible externality that the ideas within this paper will help others improve such techniques. It is difficult to tell within the storm of future states whether this would be negative or not. Regardless, we believe that interpretability is necessary if our human civilization is to successfully surf the emerging wave of AI technology. Having the ability to see where you are going and to steer are generally good things when building cars with increasingly powerful engines (safety belts are nice too!).

Finally, before delving into the paper proper, it is pertinent to address a few housekeeping issues. First, it should be noted that this paper is (currently) authored by a single individual. The use of the first-person plural "we" is adopted to maintain the conventional tone of academic discourse. Second, due to author preference, this paper is designed to be read linearly. We address methodological issues and questions within the current context. Finally, the author is engaged in an entrepreneurial venture, building a crowd intelligence website: midflip.io. We aim to innovate how we collaborate. Midflip utilizes king-of-the-hill texts and liquid democracy to iteratively and socially refine topics. A version of this paper is posted to midflip.io as an experimental test case of an academic style paper that updates over time within this system. It is still a young venture, but a very exciting one. You can find these links below and we welcome collaboration.

2. Starting General models

This paper begins by creating a model from the most general, fundamental axioms that are widely accepted as true. While our subject of interest is the learned structure within neural networks, the most general axioms don't specifically apply to neural networks, but to their broader parent class: Learning Networks. Thus, we begin with a big picture, general model of Learning Networks. This will act as our initial top-down general model.

We will then delve into the constituent parts of learning networks and consider general units of representation. Here, however, specific idiosyncrasies of neural networks become important, and so we shall describe the nature of neurons themselves, and how these idiosyncrasies relate to the general units of representation. This will create our second bottom-up general model.

Simple assumptions for subsequent discussion

Input-Output Mapping: For the purposes of this discussion, we operate under the assumption that Learning Networks establish a functional mapping from input states to output states, guided by a specific measurement function. This excludes odd scenarios such as a network that is always rewarded for producing the number 42, irrespective of input.

Network Training State: Furthermore, we generally assume (unless stated otherwise) that the Learning Networks under consideration are not only trained but are also proficient in mapping inputs to outputs.

General learning network model

A network in its broadest sense is a collection of interrelated entities. For example, the solar system is a network, as each body of mass is affected by the others via their gravity bound relationships. But the solar system is not a learning network. There is no progressive learning that changes the relationships between the bodies of mass.

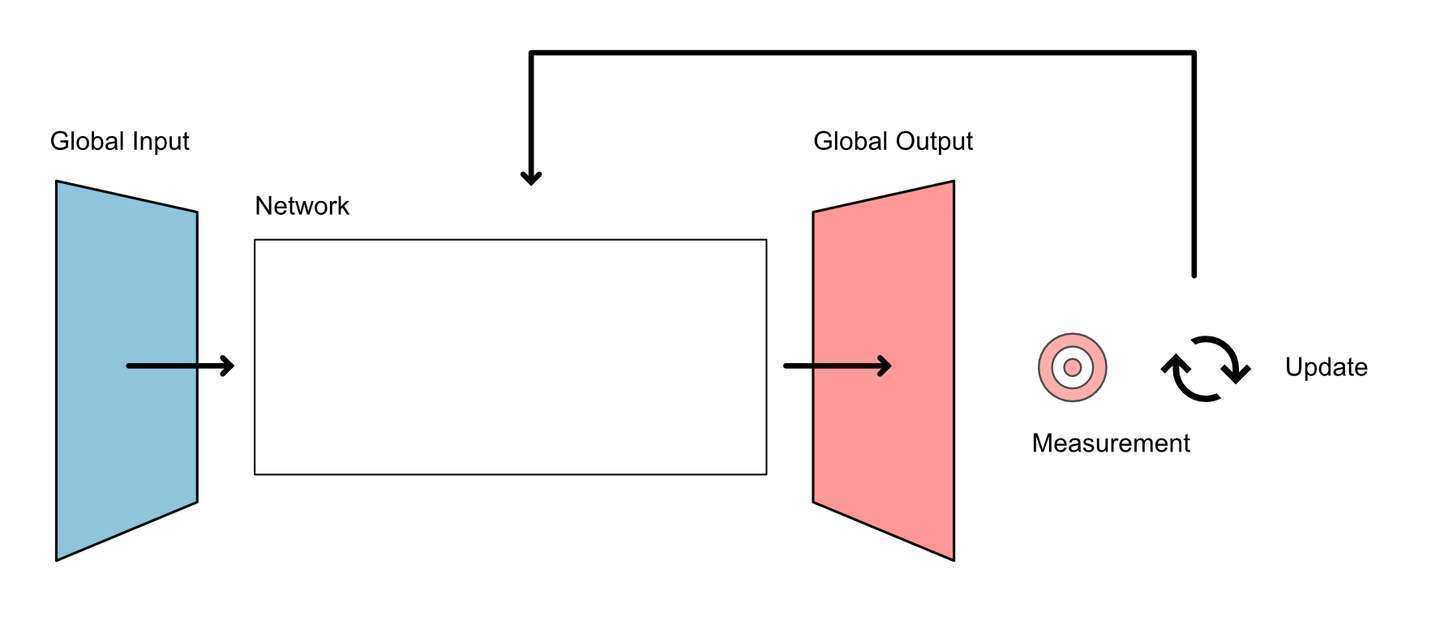

Learning networks are a set of interrelated entities whose internal makeup is systematically altered to better achieve some goal. To elucidate the concept of learning networks, we employ the INOMU framework. INOMU is an acronym for Input, Network, Output, Measurement, and Update. At a basic level, we can describe each of these as: Network receives input, network processes input, network produces output, output is measured based on some “target” state, and then the network updates based on that measurement. The steps of measurement and update collectively constitute what is commonly referred to as an optimization function. This enables the Learning Network to ‘learn’ and improve its performance over time.

Learning Networks are the parent class to which neural networks belong. Other members of the Learning Network class include: the Gene Regulatory Network with evolution as the optimization function, the economy with human demand and design as the optimization function, and the brain with a complex and not fully understood optimization function.

We will refer to the input of the Learning Network as the global input. This is in contrast to the input of a neuron of interest, which would have its own local input. The global input is a part of a set of possible inputs: the global input phase space, a multi-dimensional space that encompasses all possible combinations of input variables. The global input phase space is often constrained by the training set, the set of input-to-output mappings the Learning Network has experienced, resulting in its current capabilities.

The input into a learning network is structured by the shape of sensory apparatuses that “capture” the input. An image-like input, for example, has structured relationships between the pixels, where neighboring pixels are more related in space. This inherent structure in the input we call the input spatial structure. It captures the relationships between the “sensors” which are common among input data.

The global output does not have sensors, it has actuators. It creates change. The global output represents behaviors within whatever domain the network is operating. The global output phase space is then the set of possible behaviors the network can perform. This can then be further constrained by the training set, to represent only those behaviors the network has learned to perform.

Similar to the input, the output from a learning network is structured by the shape of its actuators which “change” the output. For example, a network which produces a sequence of data has outputs which are inherently related in temporal order. This inherent structure in the output we call the output spatial structure.

The learning network should be considered a product of its environment. You cannot consider the learning network without knowing its inputs and outputs. The dimensionality, structure and essence of the input and output should be integral to your conceptualization of any learning network. It is, for example, important to know if the network is generally expanding or compressing information.

The Learning Network itself we consider simply to have unchangeable structural components s, and changeable parameters p which update over training.

F = F(s, p)

Global Input: The data, signals or objects fed into the network for processing.

Network: The core architecture that processes the input. It consists of interconnected modules that perform specific transformations. Consists of structural elements s and changeable parameters p.

Global Output: The result produced by the network after processing the input.

Measurement: A quantitative evaluation of the output against a desired target state.

Update: The adjustment of the network's internal parameters based on the measurement.

Input/Output Phase Space: multi-dimensional space that encompasses all possible combinations of input or output variables.

Training Set: The set of input to output mappings the Learning Network has experienced, resulting in its current capabilities.
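The INOMU cycle described above can be sketched in a few lines of Python. This is only an illustrative toy under our own assumptions: a one-parameter "network" whose fixed structure s is the form p * x, a squared-error measurement, and a plain gradient-descent update. The function names (`network`, `measure`, `update`) and the learning rate are hypothetical choices made for this sketch and are not part of the model itself.

```python
# Toy illustration of the INOMU cycle (Input, Network, Output, Measurement, Update).
# Assumption: the target mapping is y = 3x and the network has a single parameter p.

def network(x, p):
    """Network F(s, p): the structure s is the fixed form p * x; p is adjustable."""
    return p * x

def measure(output, target):
    """Measurement: squared error between the output and the target state."""
    return (output - target) ** 2

def update(p, x, output, target, lr=0.05):
    """Update: one gradient-descent step on the squared error with respect to p."""
    grad = 2 * (output - target) * x
    return p - lr * grad

# Training set: pairs of (global input, target output state).
training_set = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]

p = 0.0
for epoch in range(200):
    for x, target in training_set:      # Input
        y = network(x, p)               # Network produces Output
        loss = measure(y, target)       # Measurement
        p = update(p, x, y, target)     # Update

print(p)
```

Measurement and update together play the role of the optimization function: after enough cycles the single parameter settles near the value that maps inputs to their targets.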

General Internal Entities - Inouts

Within learning networks are a set of interrelated entities which adjust and represent. In deep learning we generally consider artificial neurons. However, in this paper we challenge the assumption that the neuron is the best unit of consideration. This paper is specifically interested in representation, and the neuron, we will find, does not best capture the representations formed within deep learning networks.

Instead, we introduce a general computational entity within Learning Networks and call it an 'Inout'.

An "Inout" is defined extremely generally. It is a generic computational entity within a Learning Network. It is a grouping of parameters that takes in input and produces an output. You can think of inouts as a box that we can scale up and down and move all about a learning network. Wherever this box goes, its contents can be defined as an inout. An inout is an adjustable reference frame within learning networks. It’s a set of interrelated parameters which generates an input-to-output mapping.

But this definition of an inout is so general that it has an odd quality. Inouts can nest. Inouts can belong within inouts at different degrees of complexity. Indeed, the entire learning network itself can be defined as an inout. After all the learning network has its own input, output, and adjustable parameters. By this definition, the neural network itself, groups of neurons, layers of neurons, neurons, and even parts of neurons can all be considered inouts. Alternatively, to consider the biological domain, DNA sequences and proteins can be inouts, cells are inouts, organs are inouts, everywhere there are inouts within inouts!

Local Input: The data, signals or objects received by the inout of interest.

Local Output: The result generated by the inout’s interaction with the input based on its internal logic or function.

Structural components: Elements within the inout which do not adjust during training.

Adjustable Parameters: The modifiable elements within the inout that influence its behavior, such as weights in a neuron or coefficients in a mathematical function.
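The nesting of inouts can be made concrete with a small sketch. Here an inout is modeled simply as a named input-to-output mapping that may contain child inouts, so a neuron, a layer, and a whole network are all instances of the same class. The class name `Inout`, the helper functions, and the weights are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, List

@dataclass
class Inout:
    """An adjustable reference frame: any grouping that maps an input to an output."""
    name: str
    mapping: Callable[[List[float]], List[float]]
    children: List["Inout"] = field(default_factory=list)

    def __call__(self, x):
        return self.mapping(x)

    def depth(self):
        """Number of nesting levels, counting this inout itself."""
        return 1 + max((c.depth() for c in self.children), default=0)

def relu(z):
    return max(0.0, z)

def make_neuron(name, weights, bias):
    # A neural inout: several local inputs, one local output.
    return Inout(name, lambda x: [relu(sum(w * v for w, v in zip(weights, x)) + bias)])

def make_layer(name, neurons):
    # A parent inout whose children are neurons.
    return Inout(name, lambda x: [n(x)[0] for n in neurons], children=list(neurons))

def make_network(name, layers):
    # The whole learning network is itself an inout.
    def run(x):
        for layer in layers:
            x = layer(x)
        return x
    return Inout(name, run, children=list(layers))

hidden = make_layer("hidden", [make_neuron("n1", [1.0, -1.0], 0.0),
                               make_neuron("n2", [0.5, 0.5], 0.0)])
out = make_layer("out", [make_neuron("n3", [1.0, 1.0], -0.5)])
net = make_network("net", [hidden, out])

print(net([2.0, 1.0]), net.depth())
```

The sketch only covers neuron-level and larger inouts; sub-neural inouts, which select a subset of a neuron's inputs, would need a finer-grained box than this class draws.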

This extremely general definition of an internal unit may seem too general for its own good. But it has a major benefit. It does not assume any given structure to the Learning Network. It does not assume that neurons are a fundamental unit of computation. In fact, it does not assume any fundamental structural unit.

Because Inouts are essentially reference frames that can be scaled up and down and moved all about, it can sometimes get quite confusing to think about them. Some groupings of parameters are more useful to think about than others, but which ones? We will answer that question as we continue.

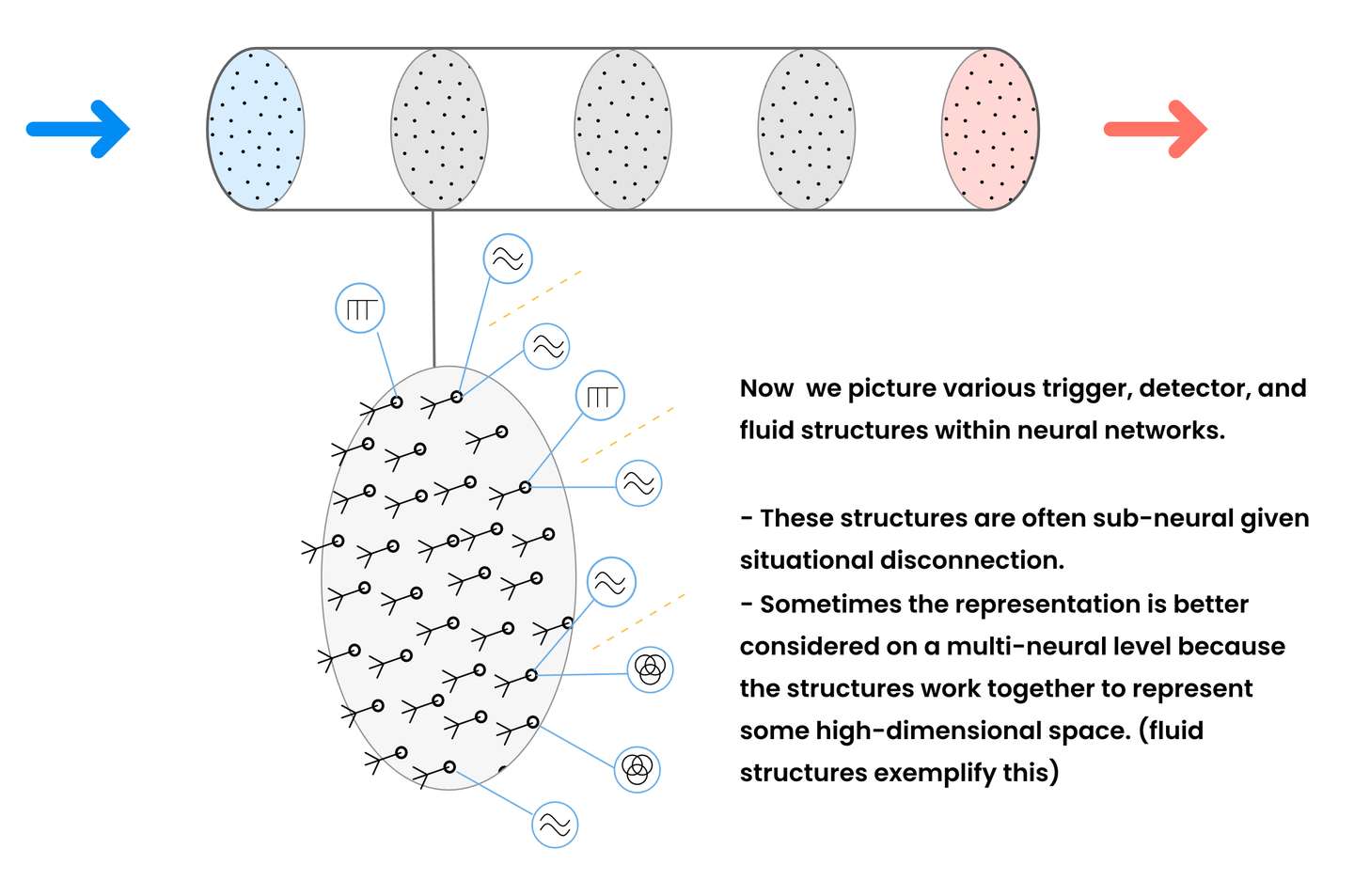

Below is an image of the main types of inouts that we will consider in this paper.

The following are terms we use when considering different types of inouts.

Neuron of interest – a neuron that we are focusing on.

Inout of interest – an inout that we are focusing on.

Parent inout – An inout that contains the inout of interest.

Child inout – an inout within the inout of interest.

Base inout – Smallest possible inout given the structure of the Learning Network.

Valuable inout – an inout whose nature has been refined by the measurement function.

Sub-neural inout – an inout that lies within a neuron

Neural inout - an inout that encapsulates all of the inputs, parameters, and output of a neuron.

Representative inout – an inout constrained by a single representation. (to be defined better soon)

Divisible inout – A definition of inputs and outputs that has separate divisible pathways within them. We should not define inouts of interest as such.

neural-output-constrained inout – an inout whose output matches the output of a neuron of interest. Generally, the input of such an inout starts at the global input.

neural-input-constrained inout – an inout whose input matches the input of a neuron of interest. Generally, the output of such an inout ends at the global output.

Specific Internal Entities – Neurons

While the general internal entity of an inout is important, it is also important to consider the specific. The structure of artificial neurons plays a pivotal role in the representations they create. This structure can be explained mathematically as follows.

Nodes and Layers: A single neuron $i$ in layer $l$ computes its output $a_i^{(l)}$ based on the weighted sum of the outputs from the previous layer, $a^{(l-1)}$, and a bias term $b_i^{(l)}$. Formally, this can be expressed as:

$$z_i^{(l)} = \sum_j w_{ij}^{(l)} a_j^{(l-1)} + b_i^{(l)}$$

Activation Function: This sum $z_i^{(l)}$ is transformed by an activation function $f$ to produce $a_i^{(l)}$:

$$a_i^{(l)} = f\left(z_i^{(l)}\right)$$

Popular activation functions include ReLU, Sigmoid, and Tanh. For most of this paper we assume ReLU.

Let’s focus on what this structure means for representation. We have multiple input channels all possibly conveying a useful signal, we interrelate these input channels given a set of parameters, and we produce a single output channel.

Each channel of information is a number through a nonlinearity. This number can represent many different things in many different ways. It can represent continuous variables and it can somewhat represent discrete variables by utilizing the nonlinearity. We say somewhat because a truly discrete variable cannot be updated along a gradient, which is required for most deep learning update methods. However, despite this, discreteness can be mimicked.

Note: the nonlinearity of neurons is an important factor going forward. Different activation functions can cause different types of nonlinearities. Generally, here, we assume the ReLU activation function, which creates a nonlinearity so that neuronal output can bottom out at zero. This can allow for more discrete representations and output patterns. Other activation functions create different capabilities and constraints on neurons and so require different interpretations of sub-neuron inouts.
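As a minimal sketch of how ReLU lets a continuous channel mimic a discrete one, consider a single-input neuron with a negative bias. The weight and bias values are arbitrary assumptions chosen so that the threshold falls at an input of 0.5.

```python
def relu_neuron(inputs, weights, bias):
    """Weighted sum plus bias, passed through ReLU."""
    z = sum(w * a for w, a in zip(weights, inputs)) + bias
    return max(0.0, z)

# Assumed parameters: output stays at exactly zero until the input exceeds 0.5.
weights, bias = [2.0], -1.0

outputs = [relu_neuron([a], weights, bias) for a in (0.0, 0.25, 0.5, 0.75, 1.0)]
print(outputs)
```

Below the threshold the output is flat at zero ("off"), and above it the output varies with the input, so the same channel can behave in a semi-discrete way while the active region still carries a gradient.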

Fundamental units of representation

For a good while it was generally assumed that neurons were the fundamental unit of representation within neural networks. This was a natural assumption given neural networks are made up of… neurons.

However, recent studies by Anthropic call the idea of the neuron as the fundamental unit of representation into question. They have shown that neural networks can represent more features than they have neurons, sometimes many more. They examine this phenomenon by studying vectors representing layer-level outputs, and show that these vectors can have more features than dimensions.

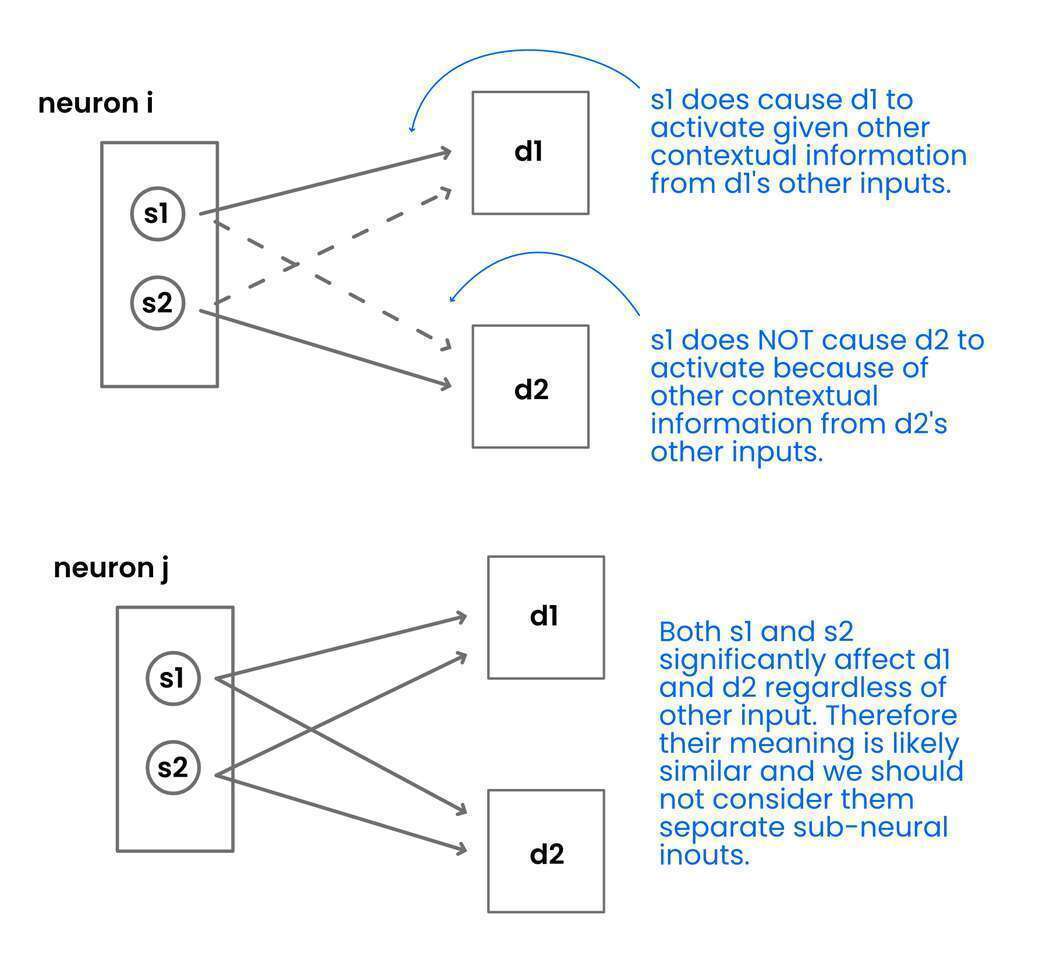

So, a feature can be detected by something smaller than a neuron. Let’s use our new inout definition to show how this may be true. This is actually rather trivial. Consider groupings of inputs and weightings within a neuron that independently activate that neuron within different contexts.

In the above example we imagine two inputs within neuron i, which with their weightings can together cause neuron i to produce an output. In such a situation we have two inputs leading to one output, with two weights and a bias as adjustable parameters. We now are imagining a functional inout smaller than a neuron.

The other inputs and weightings may interfere with this of course! A neuron only has one output signal. But in this case, we are imagining that the inputs a1 and a2 occur independently of other inputs given the situation / global input. In this particular context, a1 and a2 trigger the output alone, and within this particular context, the output is understood in this particular way. In a different context, the output may be understood differently.

For example, let’s imagine a single neuron in a visual model that recognizes both cars and cat legs. This can happen especially in convolutional networks because cars and cat legs would not occur in the same pixel space in the same image. They are contextually isolated. The output of this neuron is understood by other neurons because they too are activated by the input and have contextual knowledge. If other activating neurons around are cat face, body, and tail then the neuron of interest is considered to represent cat legs.

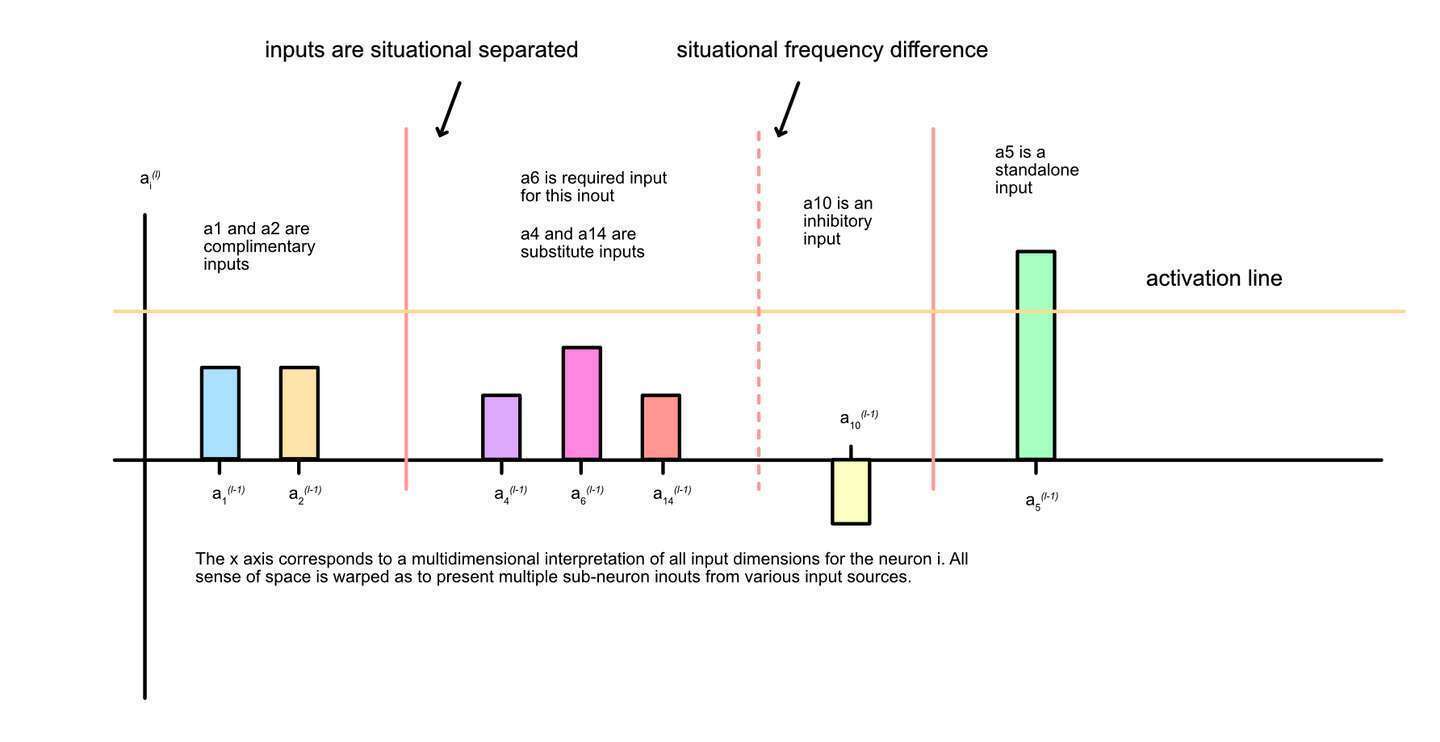

This neuron of interest has multiple inputs, some are situationally connected, and some are situationally separated. These are useful simplifications. Situationally connected inputs are inputs that fire together - they impactfully vary together during the same input situations. Situationally separated inputs are inputs that fire separately – they impactfully vary during different input situations. We will define this with more rigor later.

This situational separation means that inouts smaller than a neuron can create meaningful outputs given context. Given situational separation, sub-neuron inouts can have their own unique combination of inputs that trigger a shared output response, where this shared output response means different things given context.
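The idea can be illustrated with a toy neuron that has four inputs forming two situationally separated groups. Everything here is a hypothetical setup: the weights, the bias, and the labeling of inputs 0 and 1 as "car" features and inputs 2 and 3 as "cat leg" features are assumptions made for the sake of the example.

```python
def relu(z):
    return max(0.0, z)

def neuron(inputs, weights, bias):
    """The full neural inout: all inputs, all weights, one output."""
    return relu(sum(w * a for w, a in zip(weights, inputs)) + bias)

def sub_neural_inout(inputs, weights, bias, channels):
    """The same neuron restricted to a subset of its input channels."""
    return relu(sum(weights[j] * inputs[j] for j in channels) + bias)

weights = [0.75, 0.75, 0.75, 0.75]
bias = -1.0
car_group, cat_leg_group = (0, 1), (2, 3)

# Situations in which only one group of inputs is active.
car_situation = [1.0, 1.0, 0.0, 0.0]
cat_situation = [0.0, 0.0, 1.0, 1.0]
lone_input = [1.0, 0.0, 0.0, 0.0]

print(neuron(car_situation, weights, bias),
      sub_neural_inout(car_situation, weights, bias, car_group))
print(neuron(cat_situation, weights, bias),
      sub_neural_inout(cat_situation, weights, bias, cat_leg_group))
print(neuron(lone_input, weights, bias))
```

In each situation the sub-neural inout (two inputs, two weights, the shared bias) reproduces the full neuron's output, because the other group is silent. The output value is the same in both situations; only the surrounding context would tell downstream neurons which of the two patterns was detected.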

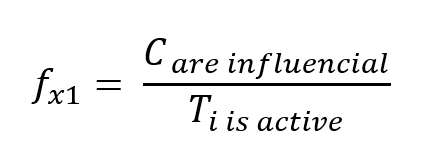

Situational connection and separation are the two poles of a dimension of difference. In reality we should consider the situational frequency of inputs: how often an input significantly influences the output of the neuron of interest. Then we should imagine the shared situational frequency between different inputs. It may be useful here to imagine a clustering algorithm that groups inputs which tend to “fire together”.

Situationally connected – Inputs to a neuron of interest that significantly influence the neuronal output at the same time. The inputs ‘fire together’.

Situationally disconnected / situational separation – Inputs to a neuron of interest that do NOT significantly influence the neuronal output at the same time. The inputs ‘fire separately’.

Situational input frequency – A measure of how inputs significantly influence neuronal output in time (i.e. within the dataset).

Output frequency – A measure of how often the neuron of interest produces a significant output.
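One possible way to operationalize these definitions is sketched below. It rests on several assumptions that the text deliberately leaves open: "significant influence" is taken to mean that the absolute contribution w * a meets a fixed threshold, shared situational frequency is measured with a Jaccard-style overlap, and the change in output frequency is taken as a simple difference between output frequency and mean input frequency. The function names, thresholds, and the tiny dataset are all hypothetical.

```python
def contributions(a, weights):
    """Per-input contribution to the pre-activation sum."""
    return [w * x for w, x in zip(weights, a)]

def significant_mask(situations, weights, threshold):
    """For each situation, mark which inputs contribute at least `threshold`."""
    return [[abs(c) >= threshold for c in contributions(a, weights)]
            for a in situations]

def input_frequencies(mask):
    """Situational input frequency: fraction of situations where each input is significant."""
    n = len(mask)
    return [sum(row[j] for row in mask) / n for j in range(len(mask[0]))]

def co_firing(mask, j, k):
    """Shared situational frequency of inputs j and k (1.0 = always together, 0.0 = never)."""
    both = sum(1 for row in mask if row[j] and row[k])
    either = sum(1 for row in mask if row[j] or row[k])
    return both / either if either else 0.0

def output_frequency(situations, weights, bias, threshold):
    """Fraction of situations where the ReLU output is at least `threshold`."""
    outs = [max(0.0, sum(contributions(a, weights)) + bias) for a in situations]
    return sum(1 for o in outs if o >= threshold) / len(outs)

# Hypothetical neuron and four input situations (rows are local input vectors).
weights, bias = [0.75, 0.75, 0.75, 0.75], -1.0
situations = [[1, 1, 0, 0], [1, 1, 0, 0], [0, 0, 1, 1], [0, 0, 0, 0]]

mask = significant_mask(situations, weights, threshold=0.5)
freqs = input_frequencies(mask)
out_freq = output_frequency(situations, weights, bias, threshold=0.25)
change = out_freq - sum(freqs) / len(freqs)

print(freqs, co_firing(mask, 0, 1), co_firing(mask, 0, 2), out_freq, change)
```

In this toy dataset inputs 0 and 1 are situationally connected, inputs 0 and 2 are situationally separated, and the neuron's output is significant more often than its average input. A clustering step over the pairwise co-firing values would be one way to group inputs that tend to fire together.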

You will notice that these definitions all require us to define “significant output” or “significantly influence”. This is difficult because different neurons and contexts may define “significance” differently. What a neuronal output represents will affect what we should consider significant. For example, there should be a marked difference between information channels representing discrete vs. continuous representations because the discrete representations likely make use of the non-linearity. Regardless, defining significance requires specific work on specific neurons and is a significant problem.

Sub neural inouts change how we conceptualize representations within a neural network. We can now imagine neurons that have multiple functional representations within them. A single neuron’s output may mean many different things in different contexts – whether continuous or discrete in nature.

In the below visualization, we get creative with space. The y-axis is the activation of the neuron, while the x-axis is an imaginary amalgamation of multiple input spaces. We use bar graphs where each input’s contribution to y output is depicted separately. We consider an average excitatory scenario for each input and weight and gage how such excitatory states work together to cause the neuron to produce output.

In the diagram we pretend we know which inputs tend to fire together within the environment (are situationally connected) and which do not (are situationally separated). We then note how situationally connected inputs need to work together to cause the neuron to fire. Here we assume discrete representations, an assumption we can relax later.

You can see how this visual depiction highlights the relationships between inputs. It is these relationships that define how input representations are combined to create the output representation. To be clear, this and any other visualization fails to capture high-dimensional space. In reality, each different range of global input would create different input contributions towards neural activation.

We can imagine all sorts of different combinations of input. If two inputs need to work together to cause a positive output, we can describe them as complementary inputs. If one input can be substituted for another and still cause a positive output, we can call those substitute inputs. If one input is required for neuronal output, we can call that a required input. If one input inhibits neuronal output, we can call it an inhibitory input.

Finally, we can consider standalone inputs. A standalone input is an input that alone can activate the output of a neuron within common training set conditions. This would be the smallest, least complex inout we can imagine within a neural network. A base inout. The base inout is the least complex sub-system that still has the qualities of an inout. Complexity here is measured simply, as the number of inputs, outputs, and adjustable parameters. These standalone inputs have one associated input, weight, and bias.
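The input relationships described above can be made concrete with a small sketch. It assumes discrete representations, a neuron that "fires" when its pre-activation is positive, and a single typical excitatory value per input; the function name `classify_inputs` and the labels are hypothetical. Required inputs are left out because identifying them needs knowledge of every firing combination.

```python
from itertools import combinations

def classify_inputs(weights, bias, typical):
    """Label inputs by how their typical contributions combine.

    ASSUMPTION: each input has one typical excitatory value and the neuron
    fires when (sum of contributions + bias) > 0.
    """
    contrib = [w * a for w, a in zip(weights, typical)]
    labels = {}
    for i, c in enumerate(contrib):
        if c < 0:
            labels[i] = "inhibitory"
        elif c + bias > 0:
            labels[i] = "standalone"       # can fire the neuron alone
        else:
            labels[i] = "needs partners"
    pairs = {}
    for i, j in combinations(range(len(contrib)), 2):
        if contrib[i] < 0 or contrib[j] < 0:
            continue                       # skip pairs involving inhibitory inputs
        alone_i = contrib[i] + bias > 0
        alone_j = contrib[j] + bias > 0
        together = contrib[i] + contrib[j] + bias > 0
        if alone_i and alone_j:
            pairs[(i, j)] = "substitute"
        elif together and not alone_i and not alone_j:
            pairs[(i, j)] = "complementary"
    return labels, pairs

# Hypothetical neuron with five inputs, each with typical value 1.
labels, pairs = classify_inputs([0.6, 0.6, 1.5, 1.2, -2.0], bias=-1.0, typical=[1, 1, 1, 1, 1])
```

Here inputs 0 and 1 only fire the neuron together, inputs 2 and 3 can each fire it alone, and input 4 suppresses it. Note that this purely structural labelling ignores whether the inputs ever co-occur in the training set.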

Base inouts with standalone inputs are interesting in their own right, but notice that these base inouts are hardly the basis of representational computing. Importantly, the sharing of the output and bias with other inouts means that these base inouts are not fully separable.

When situational separation is NOT connected to a different representation

We could imagine an algorithm going through each neuron in a network and isolating different input combinations that together can cause a positive output. Such an algorithm would find all sub-neuron inout candidates, but many of these candidates are likely false positives. We do not want all combinations of inputs that create a positive output. We want the combinations of inputs and ranges of input which actually occur in the training set and consequently are adjusted by the optimization function. We would need to know which inputs are situationally connected.

This hiccup makes measuring and isolating real sub-neuron inouts for interpretability purposes much harder. We need to understand the context in which different inputs fire. We need to know which inouts actually represent input to output mappings that have been refined by the optimization function. The algorithm would need to keep track of input training data and keep track of which input combinations for each neuron are situationally working together to create a positive output.
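A minimal sketch of such bookkeeping is shown below. It counts, over a dataset, which groups of inputs are jointly significant on the samples where the neuron actually fires. The significance `threshold`, the firing rule, and the name `observed_trigger_groups` are our own illustrative assumptions.

```python
from collections import Counter
import numpy as np

def observed_trigger_groups(inputs, weights, bias, threshold=0.5):
    """Count input groups that jointly drive a positive output in the data.

    ASSUMPTION: the neuron "fires" when its pre-activation is positive, and an
    input is significant when its contribution exceeds `threshold`.
    """
    counts = Counter()
    for x in inputs:
        contrib = x * weights
        if contrib.sum() + bias <= 0:          # neuron is silent on this sample
            continue
        group = frozenset(np.flatnonzero(contrib > threshold).tolist())
        counts[group] += 1
    return counts

# Hypothetical dataset of four samples for a three-input neuron.
inputs = np.array([[1, 1, 0], [1, 1, 0], [0, 0, 1], [1, 0, 0]], dtype=float)
weights = np.array([1.0, 1.0, 2.0])
counts = observed_trigger_groups(inputs, weights, bias=-1.5)
```

In this toy data only the groups {0, 1} and {2} are observed. A combination such as {0, 2} could also produce a positive output in principle, but it never occurs in the data, so it would not be counted as a real candidate.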

However, we have a larger problem. Even if we group inputs into situational groupings that does not mean that we should consider the groupings a part of a sub-neuron inout which represents a single thing! Consider for instance the following example.

All the inputs are situationally separated, and each input can individually cause the neuron to produce its output. So, should we consider these to be five sub-neural inouts, each producing outputs with different representational meanings? Or perhaps the output of the neuron has one representational meaning and five separate situations “activate” that representational meaning. For example, what if the representational meaning is “produce y behavior” and five different input situations utilize y behavior? In that case, because the output represents the same thing every time, one should consider this a single representative inout – with one single representational meaning and five situational triggers.

This shows that we cannot just define representational inouts based on situational separation. Sometimes situational separation is put in service of a single representation.

General Representational Inout Model

Combining our previous discussions of inouts, neurons, and situational separation gives us the General Representational Inout Model.

Inouts are a flexible reference frame. While you can define inouts via any input-to-output mapping, we try to define them so that the output matches how representations are stored within the network. This is not easy. A theoretical rule is the following:

A Representational inout is defined by an output which represents a defined set of variation that is differentiated from surrounding variation.

This rule is based on the fundamental definition of representation: to distinguish and partition a part of reality and label it. This distinction is not constrained by structural considerations, which is why we have defined inouts as we have.

As we have seen, sub-neural and neural inouts can define a differentiated set of variation. Keep in mind however that larger inouts involving multiple neurons are no exception. For example, we can have large groupings of neurons which together represent the high-dimensional spatial relationships on a chessboard. The output of that grouping is a part of a “defined set of variation that is differentiated from surrounding variation.” They represent a larger relational context.

For sub-neural and neural inouts, we have seen two important rules for isolating representational inouts.

The situational connectivity of the inputs is an important clue to whether or not neural outputs have multiple distinct representational meanings.

There are circumstances where situationally separated inputs can still be connected to the same representational output meaning.

Practically defining representative inouts is not easy. However, as we continue with this paper, we shall find other clues which will help in this effort. We will explore how to distinguish inouts based on the value they derive from the measurement function. This will provide further clues. In combination we hope these and other clues all together can create a sudoku style situation where an algorithm can jump and hop around a deep learning model and isolate representational meanings.

Summary of our general models.

We now have our general model of learning networks. We have defined learning networks as a set of interrelated units whose internal composition changes to better fulfill some measurement function. We summarize that into INOMU, where the network receives input, network processes input, network produces output, output is measured based on some “target” state, and then the network updates based on that measurement.
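The INOMU cycle can be illustrated with a deliberately tiny learning network. The sketch below assumes a linear model, a mean-squared-error measurement, and plain gradient descent as the update rule. These are illustrative choices of ours; the general model does not prescribe them.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(64, 3))              # global inputs
true_w = np.array([1.0, -2.0, 0.5])       # hypothetical mapping to be learned
T = X @ true_w                            # target states

w = np.zeros(3)                           # adjustable internal composition
lr = 0.1                                  # ASSUMPTION: fixed learning rate
losses = []
for step in range(200):
    x, t = X, T                           # Input: the network receives input
    y = x @ w                             # Network processes input; Output is produced
    loss = np.mean((y - t) ** 2)          # Measurement against the target state
    grad = 2 * x.T @ (y - t) / len(x)     # how the measurement changes with w
    w -= lr * grad                        # Update based on that measurement
    losses.append(loss)
```

Each pass through the loop is one Input, Network, Output, Measurement, Update cycle. Over repeated cycles the measurement improves and `w` approaches `true_w`.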

We have defined inouts: a computational entity which doubles as a flexible reference frame. An inout takes inputs, produces output, and has adjustable parameters and structural features. The whole learning network can be considered an inout, and the smallest single-parameter input-to-output mapping can be considered an inout. The inout is an extremely flexible and general concept.

We have defined neurons. The specific internal structural element within neural networks. We noted that neurons have multiple inputs and one output fed through a nonlinearity.

We then found that neurons can have multiple inouts within them, where the neural output means different things depending on the context. We also looked at how different inputs can have different relationships with each other in relation to how they cause the neuronal output to activate. We introduced the concepts of situational separation and situational frequency. Finally, we added the curve ball that some situationally separated inputs are still united under a single representational meaning.

3. Value within a learning network

In this section we make a series of deductions grounded in the general learning network model. Let’s begin.

Deduction 1: Value is inherently defined within a learning network.

A Learning Network ‘learns’ as it iteratively improves its ability to create a better output, given input. Within this system a ‘better’ output is always defined by the measurement system. The measurement system sets the standard for what’s considered ‘good’ within the context of the learning network. Any change that improves the output’s ability to match the target state can be defined as valuable.

Value within a Learning Network = The efficacy of generating output states in response to input states, as quantitatively assessed by the network’s measurement system.

In this context, an "effective" output state is one that aligns closely with the desired target state as determined by the measurement system. The measurement system not only scores but also shapes what counts as an effective output. Within deep learning the measurement system is encapsulated by the loss function.

Before diving into mathematical formalisms, it's crucial to note that this framework is intended to be general. The exact quantification of value would necessitate a deeper examination tailored to each specific measurement system, which lies outside the scope of this discussion.

Let f: X → Y be the function that maps from the global input space to the global output space. This function is parameterized by p, which represents the weights and biases in a neural network, or other changeable parameters in different types of models.

Let M: Y × T → ℝ be the measurement function that takes an output y ∈ Y and a target t ∈ T and returns a real-valued score. The goal in optimization is often to maximize this score, although in some contexts, such as loss functions, the objective is to minimize it.
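One hedged way to write the optimization goal under these definitions is given below. The dataset distribution 𝒟 over input-target pairs, the expectation over it, and the notation f_p for the map f with parameters p are notational assumptions of ours rather than part of the original formalism.

```latex
p^{*} \;=\; \arg\max_{p} \; \mathbb{E}_{(x,t)\sim\mathcal{D}} \big[\, M\big(f_{p}(x),\, t\big) \big]
```

When M is a loss function, the arg max is replaced by an arg min. In either case, value in the sense of Deduction 1 corresponds to movement of p that improves this expected measurement.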

Deduction 2: Valuable pathways and the dual-value functionality of inouts

Expanding on the idea of intrinsic value within Learning Networks, we turn our attention to the contributions of individual inouts to this value. Specifically, inouts are valuable to the degree that they help the larger network produce a better output as quantified by the measurement system.

Given our General Model of Learning Networks certain criteria are required for an inout to logically add value to the larger network.

First an inout has to be connected between the global input and the global output. Otherwise, it cannot help conditionally map global input to global output states.

To be connected between global input and global output, an inout either has to connect the entire distance itself or it has to be a part of a sequence of interconnecting inouts that link global input to global output. Such a sequence we call a pathway. In deep learning, artificial neurons are organized into layers, and those layers are linked sequentially to connect global input to global output. Pathways are not to be confused with layers. Pathways run perpendicular to layers so as to connect global input to global output. They are branching, crisscrossing sequences of causation starting at global input and ending at global output. To be specific, a pathway can be defined via an inout’s neuronal output. As an inout is a scalable reference frame, this can include a set of neuronal outputs. All causational inputs which lead to that set, and all causational outputs which lead from that set, can be defined as the pathway. Generally, we would want to define that set so that it matches some representation of interest.

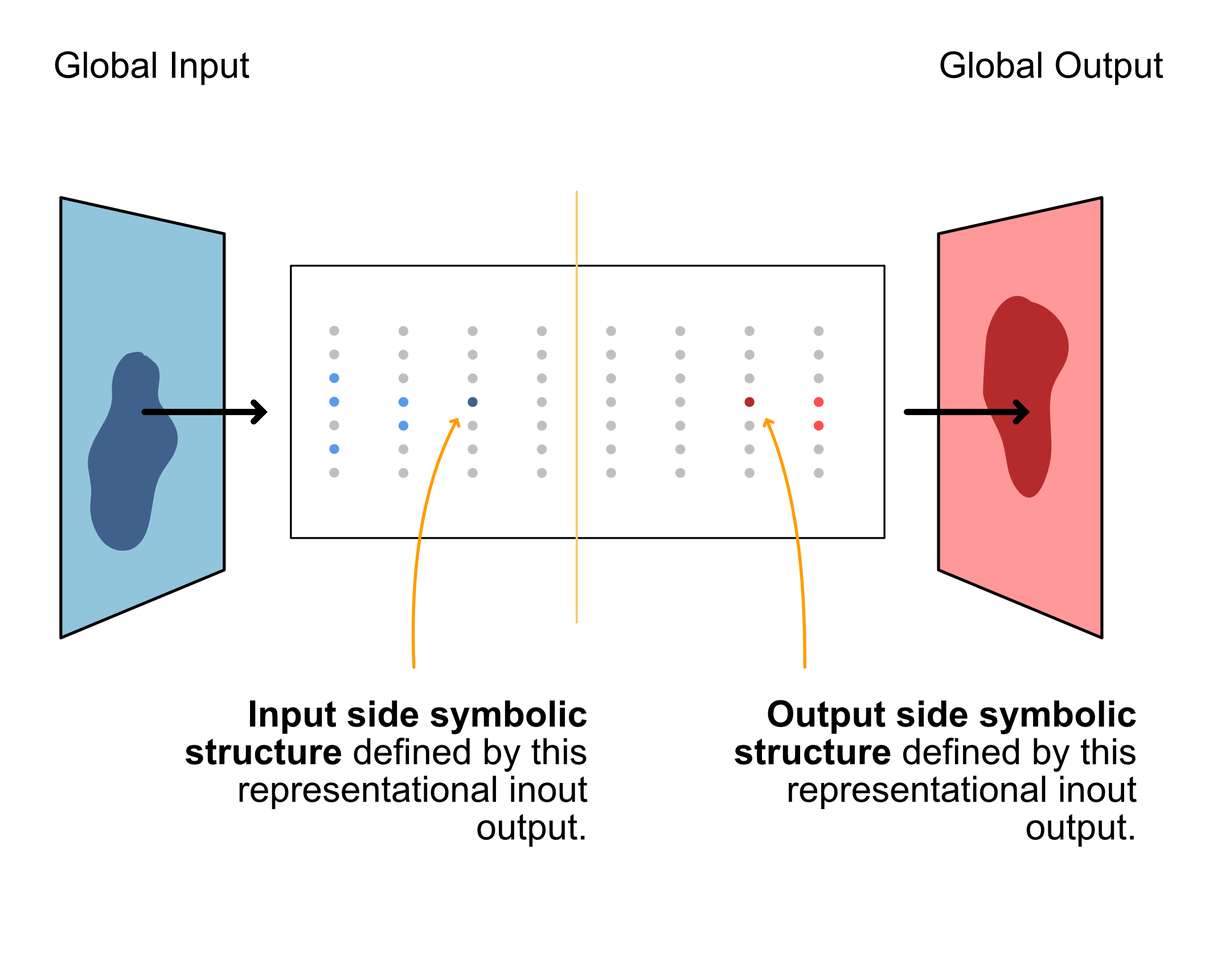
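The pathway definition above amounts to collecting everything causally upstream and downstream of a chosen set of outputs. A minimal sketch follows, assuming the network is given as a list of directed edges without loops; the function name `pathway` and the node names are hypothetical.

```python
def pathway(edges, focus):
    """Return all nodes causally upstream or downstream of the focus set.

    ASSUMPTION: `edges` is a list of (source, destination) pairs describing a
    network without loops, and `focus` is the set of outputs of interest.
    """
    children, parents = {}, {}
    for src, dst in edges:
        children.setdefault(src, set()).add(dst)
        parents.setdefault(dst, set()).add(src)

    def reach(start, neighbours):
        # Depth-first walk that collects every node reachable from `start`.
        seen, stack = set(), list(start)
        while stack:
            node = stack.pop()
            for nxt in neighbours.get(node, ()):
                if nxt not in seen:
                    seen.add(nxt)
                    stack.append(nxt)
        return seen

    return reach(focus, parents) | set(focus) | reach(focus, children)

# Hypothetical three-layer network: inputs x*, hidden units h*, outputs y*.
edges = [("x1", "h1"), ("x2", "h1"), ("x2", "h2"), ("x3", "h2"),
         ("h1", "y1"), ("h2", "y1"), ("h2", "y2")]
path_h1 = pathway(edges, {"h1"})
```

For the focus set {h1}, the pathway contains x1, x2, h1 and y1, and it cuts across the layers rather than following them.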

Given that an inout is connected between global input and global output, to add value an inout also has to use its position and act in a useful manner to the greater network. It must help with the input to output mapping. To do this each inout has to valuably act upon the global input via its local input and produce a local output state that valuably causes a response in the global output. This is subtle. On the input side, the inout must use its local input in a manner that enables valuable recognition of conditions in the global input state. And on the output side, the inout must conditionally produce a signal/transformation that induces valuable change in the global output.

To summarize a valuable inout must:

Be a part of a pathway connecting global input to global output.

The input side has to enable the recognition of conditions in the global input via its local input.

The output side has to conditionally produce a signal/transformation on the global output via its local output.

Inouts that do not meet these criteria would logically be unable to contribute to the network's overall value as defined by the measurement system.

Let XG be the global input of the Learning Network, and xi be the local input of the inout i. Let YG be the global output of the Learning Network, and yi be the local output of the inout i. We can then say that the inout i enables the causational mapping:

xi → yi

And we mathematically express the value of an individual inout as:

This definition of value considers how a change in an individual inout’s mapping between global inputs and global output affects the larger network’s mapping, and how that change affects the measurement function. This is not meant to be measured or practical; it is simply a theoretical equation to understand that an inout has value in relation to the overall learning network’s measurement function.
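Although the definition is theoretical, a rough stand-in can illustrate the idea: silence one inout and see how the measurement changes. The sketch below is not the equation referred to above. Ablation, the negative mean-squared-error score, and the tiny hand-built network are all assumptions of ours.

```python
import numpy as np

def forward(x, W1, b1, w2, mask):
    # mask entries of 0 silence the corresponding hidden unit (a neural inout)
    hidden = np.maximum(x @ W1 + b1, 0.0) * mask
    return hidden @ w2

def score(y, t):
    return -np.mean((y - t) ** 2)         # ASSUMPTION: higher score is better

def ablation_value(x, t, W1, b1, w2, unit):
    """Score with the unit present minus score with the unit silenced."""
    full = np.ones(W1.shape[1])
    ablated = full.copy()
    ablated[unit] = 0.0
    return score(forward(x, W1, b1, w2, full), t) - score(forward(x, W1, b1, w2, ablated), t)

# Hypothetical network: hidden unit 2 has no connection to the global output.
x = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
t = x.sum(axis=1)
W1 = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
b1 = np.zeros(3)
w2 = np.array([1.0, 1.0, 0.0])
value_unit0 = ablation_value(x, t, W1, b1, w2, unit=0)
value_unit2 = ablation_value(x, t, W1, b1, w2, unit=2)
```

Unit 0 sits on a pathway and removing it worsens the measurement, so its ablation value is positive. Unit 2 is not part of any pathway to the global output, so its value is zero, in line with the first criterion listed above.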

Deduction 3: the functional specialization of inouts

The concept of value is deeply entwined with the concept of specialization. A Learning Network’s value is defined by its ability to map input to output so as to best satisfy its measurement function. This creates a diverse and often complex set of demands on the network. To meet such demands, it becomes evident that specialized interconnected inouts are required. A blob of generally useful inouts is simply inconceivable unless the inouts are themselves complex learning networks (like humans). Even then we see empirically that humans specialize.

This phenomenon is not new. In economic and biological learning networks specialization is self-evident, given specific machinery and organs.

In deep learning, network specialization has been substantiated in prior research [3, 4, 5, 6, 8, 9, 10, 11, 12]. This is beautifully evidenced by Chris Olah’s work on visual neural network models [8]. They use feature visualizations, where they optimize an image to best stimulate a neuron. The resulting images offer clues to the role of the neuron. Given these feature visualizations, Chris Olah’s team built categories of neurons within the first few layers of these large visual models. Within the first layer they divide neurons into three groupings: Gabor filters, color contrast, and other.

They then move to the next layer where those first layer patterns are combined so that neurons recognize slightly more complex patterns.

They continue in this manner deeper into the network, identifying many different neurons and the possible features that they are tuned to recognize. This is empirical evidence of specialization within neural networks, where different neurons specialize into recognizing different types of features in the global input.

Why does specialization occur? First note that the optimization process during learning is constantly searching and selecting input to output mappings that improve the measurement function.

Given this, the concept of specialization emerges out of three rules:

Redundancy: Duplicate input-to-output mappings are redundant and so are generally not valuable. One will outcompete the other by providing more value and become the sole inout in the role being refined by the optimization process. Alternatively, both inouts will specialize into more specific, unique positions given more training.

An exception occurs when signals and computations are noisy; duplicate mappings can then be valuable because they increase robustness. This is common early in a network’s training.

The same functional components applied to different input-to-output mappings are not considered duplicates. For example, convolutions apply the same kernel along spatial or temporal dimensions. Each step in this process is a different input-to-output mapping along those spatial or temporal dimensions. These are not considered redundant, as they represent different things along the space and time dimensions.

Position: An inout’s capability to map input to output is constrained by its position in the network. Its position determines what local inputs it has access to, and where its outputs are sent. In economic terms, position dictates an inout’s unique access to “suppliers” of its input and “buyers” of its output. An inout’s position offers a unique opportunity for specialization.

Complexity: An inout’s level of complexity (measured by the number of adjustable parameters) constrains the complexity of the input-to-output mapping the inout can perform.

Thus, inouts at the same level of complexity are competing for similarly complex input to output mappings.

Smaller child inouts within a larger parent inout define the value and specialization of the parent inout. In the same way the value and specialization of a factory is defined by the production lines, machines, and people within it.

These three rules are themselves deductions from our general learning network model. All are grounded in the concepts of input to output mappings within an inout within a network. These rules, along with the fact that the optimization process is continually searching and choosing input-to-output mappings that better suit the measurement function, explain why inouts specialize within the general learning network model.

How do we study specialization?

We hope to find niches within learning networks based on inout specialization. However, studying specialization is problematic.

Specializations are input to output mappings that provide certain “types” of value for the measurement function. We can imagine innovating along “directions of value” so that the input-to-output mapping delivers a better measurement. We shall call these “directions of value” niches. By this definition, a car is a specialization within the transportation niche.

Specializations and Niches are nestable and examinable at different reference frames. A factory may be specialized to make t-shirts, a machine may be specialized to spin thread, a worker may be specialized to move the thread to the next machine. Indeed, we can describe the worker and the machine together as a specialized unit for spinning and delivering thread. To examine specialization and niches is to examine a gestalt.

This should remind you of our inout definition. By not assuming a fundamental unit of computation we have given ourselves a unit of inquiry that mirrors niches and specializations. Inouts as an adjustable reference frame can nest and be divided up in a similar manner.

That, however, still leaves us with a difficult problem. How does one best define specific specializations and niches? How does one draw the dividing lines? This is difficult because there is no useful “a priori” solution. We can actually show exactly why that is the case. Consider that we define “specializations” as valuable input-to-output mappings in different “directions of value” (niches).

Valuable input-to-output mappings could describe any valuable grouping of parameters and “direction of value” simply reduces down to the common backpropagation algorithm where each parameter is given a valuable vector of change as defined by the loss function. This means for every definable inout, you can define a vector of valuable change with as many dimensions as there are parameters in the inout. This is not exactly useful. It would be to say that there are as many niches as there are parameters or definable inouts. This is yet another inscrutable black box. There are no clues of how to group these parameters based on this. The dimensionality of the a priori solution is simply too high.
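The point about dimensionality can be seen in a toy case. The sketch below estimates the gradient of a loss for a single three-parameter neuron by finite differences. The ReLU neuron, the squared-error loss, and the specific numbers are illustrative assumptions; real training would use backpropagation rather than finite differences.

```python
import numpy as np

def numerical_gradient(loss_fn, params, eps=1e-6):
    """Central-difference estimate of d(loss)/d(params), one entry per parameter."""
    grad = np.zeros_like(params)
    for k in range(params.size):
        step = np.zeros_like(params)
        step[k] = eps
        grad[k] = (loss_fn(params + step) - loss_fn(params - step)) / (2 * eps)
    return grad

x = np.array([1.0, 2.0])                  # hypothetical local input
t = 1.0                                   # hypothetical target

def neuron_loss(p):
    # p = [w1, w2, b]; a single ReLU neuron scored by squared error
    y = max(p[0] * x[0] + p[1] * x[1] + p[2], 0.0)
    return (y - t) ** 2

params = np.array([0.5, 0.5, 0.0])
direction_of_value = -numerical_gradient(neuron_loss, params)
```

The resulting `direction_of_value` has exactly one component per adjustable parameter. For a three-parameter inout that is readable, but the same construction for a large inout yields a vector with millions of components and no indication of how to group them.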

We humans need to build the categories and dividing lines. This is not new. We have been doing this for a long time. It is just that in most domains our senses can make out differences that we can all point to and agree on. Consider, for example, the organs within our bodies. Our bodies are interconnected gestalts with no clear dividing lines. However, we can point to organs like the heart and draw imaginary dividing lines between the heart and the circulatory network that it is a part of.

We have, in the past, also built dividing lines within more abstract domains. In these cases, we tend to utilize grounded rules and general models. For example, in biology, to divide up the evolutionary tree, we came up with the rule that a species is defined by whether or not two members can reproduce with each other. This rule fails sometimes, for example, with horses and donkeys, and with tigers and lions, but generally it creates good dividing lines that we can put to use.

Top-down vs. bottom-up definitions.

In this paper we utilize two separate methods of defining niches: a top-down and a bottom-up approach. Like digging a tunnel from both ends, we meet in the middle and create a more sophisticated and useful model for it.

First, we define broad niches based on our general learning network model. This top-down approach grounds categories and dividing lines within an extremely general model where we can all see and argue over the model’s axioms. This is similar to dividing up the evolutionary tree based on the “can two members reproduce” rule. Because our General Learning Network Model is so general, we hope that any deduced dividing lines will also be generally applicable.

The second approach for defining niches is based on useful dimensions that we can measure. This bottom-up approach is reminiscent of dividing up the organs of our bodies based on visual features that we can all distinguish. However, when it comes to neural networks, no such visual features present themselves. Instead, we have to get creative. We are going to find a set of measurements that we argue best describe different input-to-output mappings within neural and sub-neural inouts. We will then imagine the extreme “poles”, where we take these measurement dimensions to the extreme. Then we shall mix and match these extreme poles until we find combinations that we can deduce are valuable. These valuable extreme pole combinations we define as specialized archetypes: idealized neural structures which are valuable because of said structure. We can then consider how these archetypal structures are valuable and thus define niches.

4. Top-Down Specializations

In the next three chapters, we begin isolating niches and specializations within neural networks. We define these niches from two directions: top-down and bottom-up.

In this chapter we move top-down and derive niches based on our General Learning Network Model. These will be broad niches that contain within them many other smaller niches. Because these niches are derived from the General Learning Network Model, they should be applicable to all learning networks, not just neural networks.

Deduction 4: Causational Specialization & the signifier divide

Representation is the modelling of external factors. Within our general model of learning networks there are two sources of external factors that can be modelled. First there are external factors presented by the global input, such as sensed phenomena. Second there are external factors that are triggered onto the global output, such as behaviors. All representations within a learning network must originate from the global input or global output, as there is no other source from which to derive these representations within the scope of our model.

In deduction 4 we claim: Given a trained learning network without loops there is a division s in which representations prior to s are best represented in terms of the global input, and representations after s are best represented in terms of the global output. We call the division s the signifier divide.

This seemingly simple deduction is of utmost importance. It presents a theoretical break from the common knowledge within the deep learning community.

Perhaps this deduction is best understood in information theory terms. Given a trained learning network, there is a division s at which the message conveyed transitions to a different representational domain. The initial message was based on the input distribution; the final message is based on the output distribution. The signifier divide describes the point of transition.

We will discuss abstraction in much more detail in a future chapter. However, for those who already understand, this means there are two distinct types of abstraction.

Input side: Abstraction involves compressing the information contained in the global input into useful features and representations.

Output side: Abstraction involves expanding a different internal representation to construct the desired global output.

The signifier divide marks an imaginary transition point between these two different types of abstraction. It is where we can best say the input situation causationally connects to the produced output behavior.

The signifier divide is not truly a “divide”. Reality is more complicated. For example, the first neurons in a visual neural network often represent curves, edges, or some such low-level input pattern. However, you could technically describe these neurons by their effect on the output of the network. Such a description would be a chaos of causational ‘ifs’, ‘ands’, and ‘buts’. It would be a meaningless mess of an output representation, but it could theoretically be done. Such initial neurons are better understood as representing input patterns such as curves, because the causational complexity linking the input pattern to the neural output is much (much) less. The representation is more connected to the input side.

The same is true on the output side. We can imagine a visual autoencoder neural network. In such a network, we can imagine a neuron which is linked to the generation of a curve. Whenever the neural output is triggered, the model creates a curve in such-and-such a location. Now technically we could describe this neuron based on its input situation. We can list 60,000 different input situations where this neuron fires, and say this neuron represents these 60,000 different global input situations. But this is rather meaningless; the neuron is causationally closer to the output side. Describing it in input situational terms is a representational domain mismatch.

The signifier divide separates these representational domains. On one side, it is causationally simpler to say: this is based on the global input. On the other side, it is causationally simpler to say: this represents a behavioral response. Given this context we can still discuss “sharp” aspects of the signifier divide. We can imagine simple robotic toys which have rules like “see big moving things” -> “run-away”. This is a sharp causational connection between a representation based on the input, and a representation based on the output. However, we can also discuss “continuous”, “blurry” aspects of the divide. We can imagine multi-dimensional continuous transformations which slowly change input space to output space.

There are situations in which we consider the divide to be absent. To elaborate, let’s call x* a representational embedding of x, so that x* directly represents some distinguished set of variation within x. The signifier divide is “absent” when y = x* or x = y*.

Consider y = x*, this is when the global output is itself a representational embedding of the global input. This is the case in categorical encoders, where the input is an image, and the output is a classification of the image. In this case, there is no value in y having a separate representational message, y itself is a representation of x.

Now consider the other case, x = y*. This is when the global input is itself a representational embedding of the global output. This is the case in the generator network within GANs. Now the input has no meaning; the GAN generator input is traditionally noise. It is the output from which all representational value is derived.

These rules become important when we consider arbitrary inouts. Consider that we can divide the network by isolating inout sections. In such a case, the inout section has its own definable global input-to-output mapping. Does such a section have its own signifier divide? Well, if we isolate a section of the network on either side of the divide, the divide of the larger network remains in the same spot. The signifier divide of the inout section fills the role x = y* or y = x* and ‘points’ towards where the larger network’s divide is. If an inout has an output which is a representational embedding of its input, then we can conclude that the signifier divide of the larger network is downstream.

As a general rule, the signifier divide moves depending on the function of the network and the dimensionality of the input and output side. High dimensionality within the input may require more “work” in isolating useful representations and so the divide moves towards the output side.

Justifying the signifier divide – behavioral modularization

Let us now consider a separate intuition. One based on behavioral modularization.

First, we reiterate some of our earlier deductions. Learning networks map inputs to outputs as to deliver a better measurement. This creates an inherent internal definition of value. Functional specialization then occurs as different parts of the network are better suited to different tasks, and given a learning gradient, improve into their specialized role.

Given this, we claim that there is general value in reusing behaviors so that multiple situational inputs can utilize these behaviors. Consider for instance all the situations in which it is valuable to walk. Would it make sense to have all situational inputs linked to their own separate walking output behavior? Or would it be more efficient and effective to have these situational inputs all linked to a single walking module? Obviously, the latter.

During training it is more immediately valuable to make use of what is already available. We argue modularization of output behavior tends to be inevitable given that multiple different input situations find similar behaviors valuable. This of course does not mean that the modularization is optimal and that redundancies do not occur. Real life is messy.

Perfect reuse is not required either. A situational input that requires a slightly different behavior is generally better off utilizing a common behavior and altering it. For example, sidestepping an obstacle likely does not utilize an entirely different movement module. It is likely a learned adjustment within the walking module. Creating an entirely new codified behavior requires many more structural changes and should therefore be considered less likely.

The trick here is that the mapping of global input to global output is not uniformly parallel, where one valuable input leads to one valuable output. Instead, ranges of otherwise independent areas of input space can map to similar output behaviors. This is especially true when the dimensionality of the global output is low, whilst the dimensionality of the global input is high.

This is important to the signifier divide for multiple reasons. First, it justifies the idea of neurons representing behaviors. If twenty disconnected situations connect to a neuron that triggers the “run” behavior, is the neuron representing those twenty disconnected situations or the behavior run? Certainly, good programmer naming conventions would call the modular behavior: run. Second, we see true value in modularizing representations of output space distinctly from representations of input space. Distinct output-side representations are valuable in order to be reused and reutilized.

Behavioral modularization offers a clue to where such a divide may lie. The neurons representing the output behavior may be identifiable in that they have multiple situationally disconnected inputs. The neurons representing the output behavior may be identifiable in that they form specialized groupings modulating and adjusting the behaviors within the valuable range. The clue is different input side representations derived from different situations utilizing common output side representations.

Assuming behavioral modularization, we get another interesting information theory perspective to the signifier divide. We will discuss this perspective in much more detail later. But for now for those who can follow along… We can define representational domains based on whether representations are gaining or losing information about the global input or output.

To do this, start by imagining the amount of information each representation has about the global input. As representations build on top of each other to make more abstract representations, each representation is gaining more information about the global input. At some point, however, that changes: the point of behavioral modularization along the signifier divide. Past that point, each representation has less information about the global input because multiple input situations can trigger the same behavioral response.

This phenomenon is mirrored on the output side. If you consider moving upstream from the global output, each representation will have more information about the global output response than the representation before it, until you hit the signifier divide. At this point each representation starts having decreasing information about the global output response because the input situation has not been fully encoded yet.
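The rise and fall of information about the global input can be illustrated with a toy calculation. This is a minimal sketch, not a measurement of a real network: the two binary cues, the three unit functions, and their names are hypothetical assumptions. An early unit sees one cue, a recognition unit combines both cues, and a behavior unit collapses several situations into the same response.

```python
import math
from collections import Counter
from itertools import product


def mutual_information_bits(pairs):
    """Mutual information (bits) between two discrete variables,
    estimated from a list of equally weighted (a, b) samples."""
    n = len(pairs)
    joint = Counter(pairs)
    left = Counter(a for a, _ in pairs)
    right = Counter(b for _, b in pairs)
    return sum(
        (c / n) * math.log2(c * n / (left[a] * right[b]))
        for (a, b), c in joint.items()
    )


# Hypothetical global input: two binary cues, all four combinations equally likely.
global_inputs = list(product([0, 1], repeat=2))


def early_unit(x):
    return x[0]  # sees only the first cue


def recognition_unit(x):
    return x  # combines both cues into one recognized situation


def behavior_unit(x):
    return "run" if (x[0] or x[1]) else "walk"  # several situations share "run"


info_early = mutual_information_bits([(x, early_unit(x)) for x in global_inputs])
info_recognition = mutual_information_bits([(x, recognition_unit(x)) for x in global_inputs])
info_behavior = mutual_information_bits([(x, behavior_unit(x)) for x in global_inputs])

print(f"early unit:       {info_early:.3f} bits about the global input")
print(f"recognition unit: {info_recognition:.3f} bits about the global input")
print(f"behavior unit:    {info_behavior:.3f} bits about the global input")
```

In this toy setup the information about the global input grows from the early unit to the recognition unit and then drops at the behavior unit, because three of the four situations trigger the same response.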

What we are seeing here is causational specialization. The representations towards the global output are in a better placement to represent the output. The representations towards the global input are in a better placement to represent the input. We are defining a fundamental rule about Learning Networks based on value.

The value of the representation is the subtle key here. If the value of the representation is derived from the effect it causes, it should be considered a “Y*”, a representation of a responsive behavior. If the value of the representation is derived from the situation it recognizes, it should be considered an “X*”, a representation of a recognized pattern of inputs. This division is not easy to find, and may not be discrete; however, behavioral modularization gives us clues.

Contextualizing the signifier divide

Major questions arise from the signifier divide. What is the nature of this divide? Is the divide continuous or discrete? Where in the network is the divide? Is conceptualizing a divide theoretically or practically useful?

These questions do not have simple answers. We shall need to continue further to answer them satisfactorily.

For now, we can provide a simplified model and intuition.

A valuable pathway must connect a valuable recognition of the global input and coordinate a valuable response within the global output. See deduction 2.

If you follow a valuable pathway between input and output, you are guaranteed to cross the signifier divide at some point. See deduction 4.

This includes pathways of different lengths and complexities. Many Learning Network structures allow for valuable pathways with different lengths. Consider skip connections in U-Net, or shorter parallel pathways in the brain. Such pathways will have their own signifier divides as long as they connect global input to global output.
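The claim that every input-to-output pathway crosses the divide, including shorter skip-like pathways, can be sketched on a toy graph. Everything here is a hypothetical assumption made for illustration: the node names, the graph, and especially the X*/Y* labels, which in a real network would have to be measured rather than assigned by hand.

```python
def all_paths(graph, start, goal, prefix=None):
    """Enumerate every directed path from start to goal in an acyclic graph."""
    prefix = (prefix or []) + [start]
    if start == goal:
        return [prefix]
    paths = []
    for nxt in graph.get(start, []):
        paths.extend(all_paths(graph, nxt, goal, prefix))
    return paths


def crossing_edges(path, domain):
    """Edges on the path that step from an input-side (X*) node to an output-side (Y*) node."""
    return [
        (a, b)
        for a, b in zip(path, path[1:])
        if domain[a] == "X*" and domain[b] == "Y*"
    ]


# Hypothetical network with one long pathway and one shorter, skip-like pathway.
graph = {
    "input": ["edge_detector", "coarse_cue"],
    "edge_detector": ["object_recognizer"],
    "object_recognizer": ["plan_motion"],
    "coarse_cue": ["reflex"],
    "plan_motion": ["output"],
    "reflex": ["output"],
}

# Hand-assigned representational domains (an assumption, not a measurement).
domain = {
    "input": "X*",
    "edge_detector": "X*",
    "object_recognizer": "X*",
    "coarse_cue": "X*",
    "plan_motion": "Y*",
    "reflex": "Y*",
    "output": "Y*",
}

paths = all_paths(graph, "input", "output")
crossings = [crossing_edges(p, domain) for p in paths]
for p, c in zip(paths, crossings):
    print(" -> ".join(p), "| crosses at", c)
```

Because every pathway starts in X* and ends in Y*, each one must contain at least one crossing edge. The two pathways here cross at different depths, which mirrors the idea that each pathway has its own signifier divide.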

The divide can be either sharp and discrete or continuous and blurry, depending on the functions that underlie the divide.

If the network has higher global input dimensionality than global output dimensionality, then the signifier divide is generally closer to the output side. Indeed, you can set up networks so that there is no effective output side because there is no need to have multiple layers of response. For example, consider categorical encoders, a common deep learning network where the network outputs a category detection such as “cat” or “dog”. The final output in this case is a representation of the input. In such a network there is no substantial output side.

If the network has higher global output dimensionality than global input dimensionality, then the signifier divide is generally closer to the input side. Once again, you can set up networks with no effective input side. For example, consider the generator network of a GAN: it starts with random noise and eventually produces a “believable” image. There is no substantial input side in this network.

The signifier divide will be of interest for the rest of the paper. We will study how different representational types affect this divide, and if we can isolate it in any meaningful manner.

Representational Niche: Recognition, Prescription, and Response.

We have asserted that if we discount looping information structures, there is a divide in all pathways leading from global input to global output. The divide separates representations derived from the global input and representations derived from the global output. We can use this divide to describe a few broad niches.

First, we have asserted that there is a set of neurons which represent external phenomena either in the global input or global output. We call this broad niche: the representational niche.

Representational niche: Value in representing variation / features within the global input or output.

Within the representational niche we can subdivide neurons into three broad groupings based on where they lie relative to the signifier divide. We use the following names for these specializations.

Recognition niche – Value in recognizing and representing parts of the global input.

Response niche – Value in representing and altering parts of the global output.

Prescription niche – Value in connecting recognitions on the input side to responses on the output side.

Inouts within the recognition and response specializations are defined by their connection to the global input and global output respectively. Inouts within the prescription specialization include functions from both the recognition and response specializations.

You could argue that the prescription specialization is redundant. We could think of inouts that connect input recognitions to output responses as inouts that have dual roles within the recognition and response niches. This is true; however, we believe it is useful to have a label for such inouts.

Less clear is the nature of these inouts which bridge the gap from recognition to response. Is the transition continuous or discrete? What would it mean for a representation to represent both features within the global input and behavioral responses in the global output?

The Helper niche

The recognition, response, and prescription niches all fit within the representational niche. The representational niche was defined by representing variation or features within the global input or output. A question arises from this. Are there any inouts that do NOT represent variation within the global input or output?

According to our general learning network model all value derives from successfully mapping global input to output and there are no other sources of external variation that affect the network. Any inout providing some other source of value would therefore have to perform some sort of helper function without representing external variation itself.

We could therefore hypothesize about a helper niche. You could argue that this helper specialization should be included because of empirical evidence. Every learning network we know about has niche helper functions. The economy has entire industries that focus on maintaining products that perform valuable functions. Massive portions of the Gene Regulatory Network functionally maintain and preserve the internal conditions that allow for optimal input-to-output mappings. If these learning networks have helper niches, it is perhaps likely that neural networks also have neurons which optimize into helper functions.

We hypothesize that helper functions are universally present within Learning Networks of sufficient complexity because being “helpful” always returns a better measurement score. An assorted array of specializations may act in this capacity: they do not exactly recognize input or produce output responses, but they generally make things easier for the inouts which do.

However, defining this niche as 100% separate from the representational niche is likely difficult. Wherever you define helper functions, some representational capacity is generally present. You should think of this niche as exactly that, a niche… a direction of value. A direction of value that acts upon representations without that action relating to the representations themselves.

Some possible examples of the helper specialization within neural networks.

- Noise reduction – Reducing noise or unrelated signals within a channel of information.

- Robustness – Value in multiple pathways when one may be compromised.

- Pass along – Value in passing along information without any transformation or recognition. This is sometimes valuable as a way to work around architectural constraints.
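As one illustration of the pass along helper, consider a layer whose units must all go through a ReLU nonlinearity. This is a hypothetical sketch of one well-known workaround, under the assumption that the architectural constraint is the ReLU itself: a pair of units can pass a signed value through unchanged, since relu(x) - relu(-x) = x. The function names are invented for this example.

```python
def relu(v: float) -> float:
    """Standard rectified linear unit."""
    return max(0.0, v)


def pass_along(x: float) -> float:
    """Carry x through a ReLU layer unchanged using two units.

    One unit carries the positive part of x, the other carries the negative part.
    The next layer recombines them with weights +1 and -1.
    """
    positive_part = relu(x)
    negative_part = relu(-x)
    return positive_part - negative_part


for value in [-2.5, 0.0, 3.0]:
    print(value, "->", pass_along(value))
```

Neither unit recognizes a feature or shapes a response in this sketch. Their only contribution is to preserve a signal that the architecture would otherwise clip, which is the sense of a helper function described above.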

These helper specializations are important to consider but are not a core part of the input-to-output mapping process (by definition). Perhaps most notable is the idea that these helper specializations may interfere with our identification of other specialized inouts because they may share similar distinguishing features.

5. Measurable differences relating to neural representation.

“You know there is a sense behind this damn thing, the problem is to extract it with whatever tools and clues you have got.” – Richard Feynman

Our top-down deductions led us to define the representational niche and the helper niche. Within the representational niche, we took the causational input-to-output mapping of learning networks and split this function into two broad steps: recognition and response. Additionally, we defined a third niche, the prescription niche, to encapsulate any inouts bridging the gap between recognition and response.

Now we pursue a different direction of distinguishing specializations. Here we distinguish different niches by any means we can measurably identify. We take neural networks and see by which means we can distinguish form and function.

This direction immediately hits a brick wall. The matrices of neural networks seem inscrutable. So how do we proceed?

We will do the following.

Describe a set of measurable differences.

Define extreme poles along those measurable dimensions.

These extreme poles will help us define extreme archetypal solutions.

We can then deduce from the structure of these extreme archetypal solutions how they may or may not be valuable. This will allow us to define niches of value according to measurable dimensions.

This methodology has a few major caveats.

We drastically simplify the set of possible neurons here into a few main groupings. The benefit and curse of this is that it hides a lot of complexity.

The extreme archetypal solutions are archetypes; real neurons/inouts may not actually become so specialized in these structural directions. Instead, real neurons/inouts may tend towards being combinations of these archetypes.

The choice of measurable differences has a large impact on the final structural demarcations. We have chosen measurable differences that we believe are most relevant. Others may argue for other measurable differences and come to different conclusions.

This methodology requires a deduction step which can be argued over. As with all deductions, it should be clearly stated, and subject to criticism.

The major advantage of this method is that it gives us a direction! With this method we can start dividing up and understanding neural networks' inscrutable matrices. With this method, we hope to build a language with which we can consider different groupings of inouts based on their functional role.

Our other objective here is to establish a framework that transitions from theoretical postulates to measurable predictions, thereby paving the way for future empirical investigations. It's important to note that this paper does not conduct empirical measurements but instead aims to set the theoretical groundwork for such endeavors. We encourage teams working on AI alignment or interpretability to consider pursuing this avenue of measurements.

Towards this end, as we discuss these measurable differences, we will suggest a broadly defined software solution that can make these measurements. We briefly outline the processes and measurements we envision this software taking. We do not go into specifics however, as a lot of this work requires iterative improvement with feedback.

To be clear, our theoretical work does not require these measurements to be measurable, only understandable, and clear. We do of course, however, want these measurements to be measurable so that we can make measurable predictions.

Requirements for useful measurements to distinguish specialized neural structures

Each measurement needs to give us information about the input-to-output mapping of a neural inout.

The measurement needs to relate to learned relationships.

Each measurement needs to add new information and cannot be assumed from the other measurements.

These requirements make sense because it is the learned input to output mapping that is valuable to the loss function. It is the learned input to output mapping which specializes. It is the learned input to output mapping which produces representations.

As a counterexample, you could imagine considering “how many inputs does a sub-neural inout have?” as a measurement. This is superficially useful, but because it only relates to the inputs and does not describe the relationship between the inputs and the output, it is only of limited help in describing how the inout has specialized.
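To make the point concrete, here is a minimal sketch with two hypothetical threshold units. Both have exactly three inputs, so an input-count measurement cannot tell them apart, yet their learned input-to-output mappings differ sharply. The weights, biases, and the "activation fraction" statistic are assumptions chosen for illustration. They are not the measurements proposed in this paper.

```python
from itertools import product


def make_unit(weights, bias):
    """Build a simple threshold unit: outputs 1 when the weighted sum plus bias is positive."""
    def unit(inputs):
        total = sum(w * x for w, x in zip(weights, inputs)) + bias
        return 1 if total > 0 else 0
    return unit


# Two hypothetical units with identical fan-in but different learned mappings.
and_like_weights, or_like_weights = [1, 1, 1], [1, 1, 1]
and_like = make_unit(and_like_weights, bias=-2.5)  # fires only when all inputs are on
or_like = make_unit(or_like_weights, bias=-0.5)    # fires when any input is on

patterns = list(product([0, 1], repeat=3))


def activation_fraction(unit):
    """Fraction of binary input patterns for which the unit fires."""
    return sum(unit(p) for p in patterns) / len(patterns)


fan_in_and, fan_in_or = len(and_like_weights), len(or_like_weights)
frac_and, frac_or = activation_fraction(and_like), activation_fraction(or_like)

print(f"fan-in: {fan_in_and} vs {fan_in_or}")
print(f"activation fraction: {frac_and} vs {frac_or}")
```

The input count is identical for both units, while a statistic that looks at the mapping itself separates them immediately. That is the reason for requiring each measurement to describe the input-to-output relationship.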

Preconditions for Effective Measurement

Before delving into the metrics, it's essential to establish the preconditions under which these metrics are valid.

Sufficient Network Training

A Learning Network must be adequately trained to ensure that the connections we intend to measure are genuinely valuable according to the loss function. This precondition is non-negotiable, as it lays the foundation for any subsequent analysis.

Pruning Non-Valuable Connections